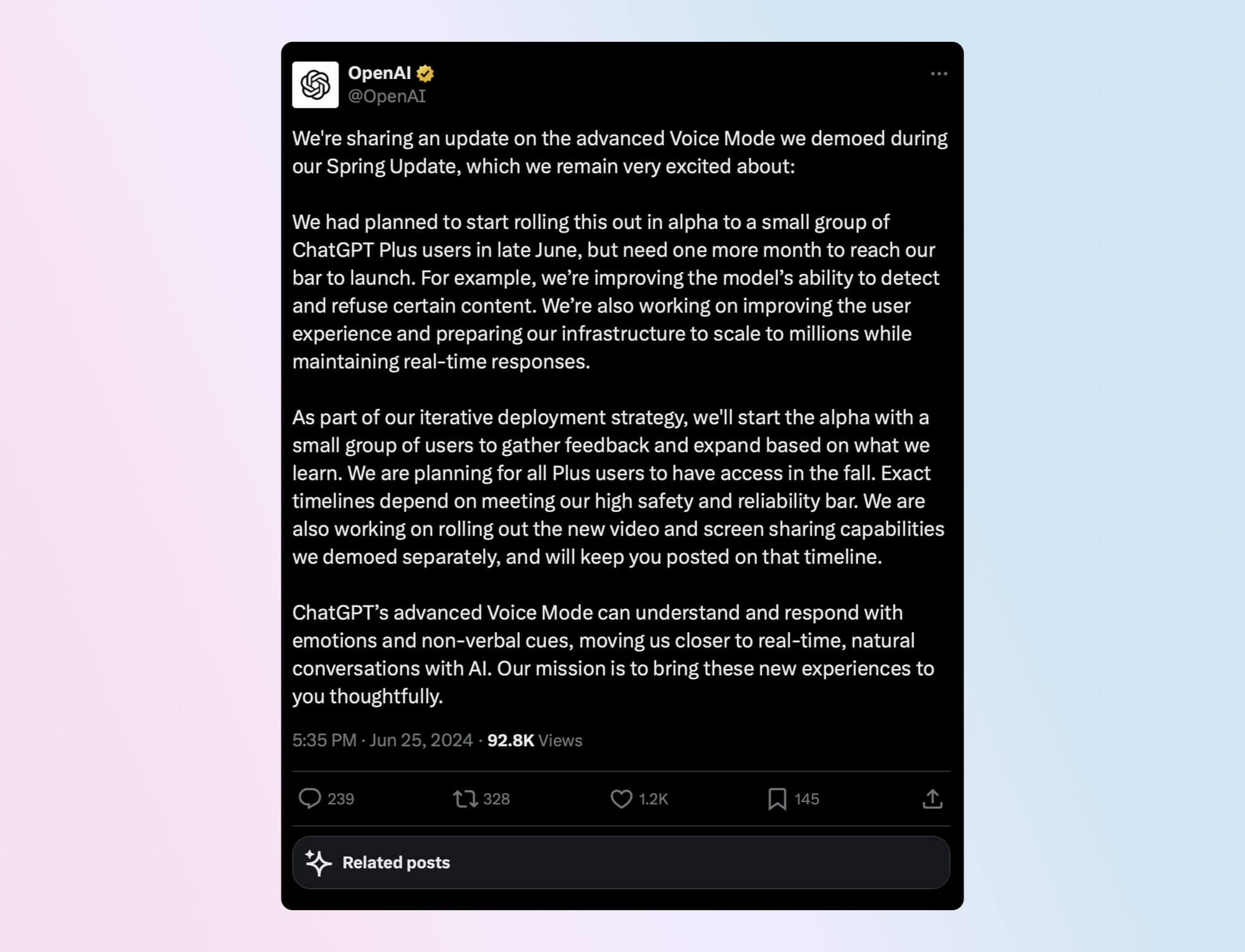

OpenAI is pushing back the launch of ChatGPT's new Advanced Voice Mode, citing the need for further improvements. The company had planned to start rolling out this feature to a small group of ChatGPT Plus users in late June, but now says it needs another month to meet its standards.

The new Advanced Voice Mode, powered by the GPT-4o model, is supposed to bring AI conversations closer to natural human dialogue. When unveiled at OpenAI's Spring Update event last month, the feature showcased the ability to understand and respond with emotions and non-verbal cues. It also demonstrated impressive speed, with response times as low as 232 milliseconds.

The delay stems from ongoing work on two key areas: safety and user experience. OpenAI wants to improve the model's ability to detect and refuse certain types of content, likely to prevent misuse and ensure responsible AI interactions. They're also focusing on improving the user experience and preparing their systems to handle millions of users while maintaining real-time responses.

Earlier this month, we covered the announcement of a new partnership between OpenAI, Microsoft, and Oracle that will allow OpenAI to use Oracle Cloud Infrastructure (OCI) to extend the Microsoft Azure AI platform. This will likely play a big role in providing the much-needed capacity OpenAI requires to scale access to its models.

Despite the setback, OpenAI remains committed to a broad rollout. They plan to start with a small alpha test group and gradually expand based on feedback. The company still expects all ChatGPT Plus users to have access by fall, though exact timelines depend on meeting their safety and reliability benchmarks.

This cautious approach aligns with growing industry awareness of the need for responsible AI development. As AI systems become more advanced and human-like, ensuring they operate safely and ethically becomes increasingly crucial.

Although ChatGPT already uses the new multimodal GPT-4o model, its multimodal capabilities are currently limited to images. OpenAI says it will release the video and screen-sharing capabilities separately; however, it hasn't provided specific timelines.

While some users are expressing disappointment over the delay, OpenAI's focus on getting things right before launch makes sense. Hopefully, when Advanced Voice Mode and the new multimodal capabilities do arrive, they will deliver a more polished and safer experience.