OpenAI has revealed that it disrupted a covert Iranian influence operation (IO) that was using its ChatGPT service to generate content on a range of topics, including the U.S. presidential campaign.

The company said it identified and banned a cluster of ChatGPT accounts linked to an operation known as Storm-2035, which Microsoft Threat Intelligence has described in a separate report. The operation used the chatbot to produce articles and social media posts on subjects ranging from U.S. politics to global events, which were then distributed across multiple platforms.

According to OpenAI, the operation relied on five websites posing as both progressive and conservative news outlets, all publishing content generated with ChatGPT. The material mainly covered the conflict in Gaza, Israel's participation in the Olympic Games, and the U.S. election candidates. Additional content focused on Venezuelan politics, Latinx rights in the U.S., and Scottish independence.

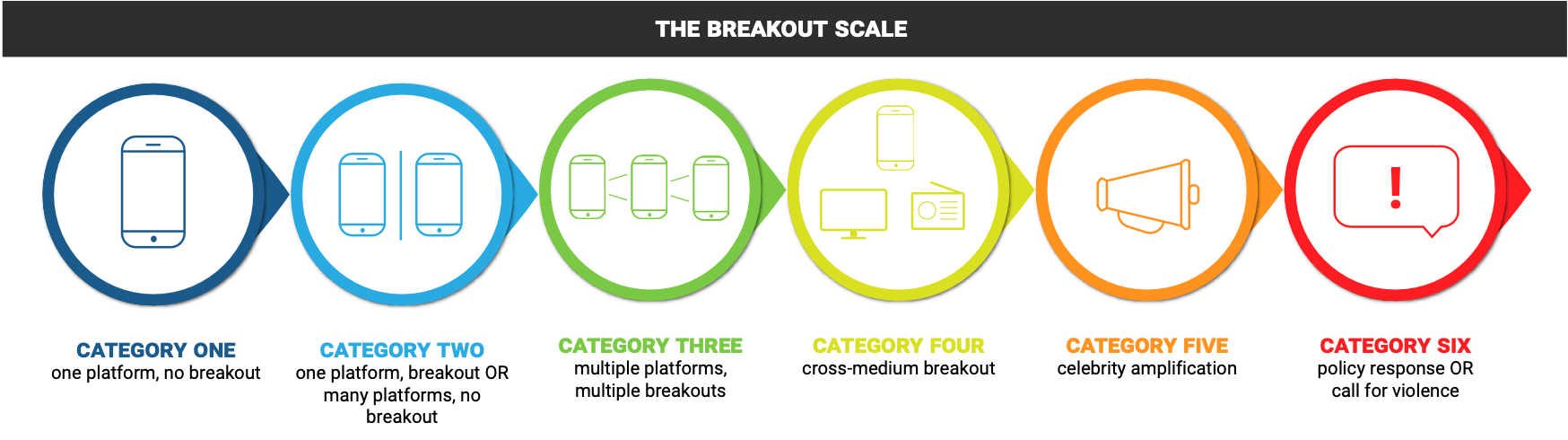

Despite these efforts, OpenAI states that the operation appears to have gained little traction. Most of its social media posts received minimal engagement, with few likes, shares, or comments. The company rated the operation's impact as low (Category 2) on the Breakout Scale, a Brookings framework for assessing influence operations. This means the IO was active on multiple platforms, but there is no evidence that real people picked up or widely shared its content.

The investigation revealed that the operators used ChatGPT in two main ways: generating long-form articles for fake news websites and creating shorter comments for social media platforms. OpenAI identified a dozen accounts on X (formerly Twitter) and one on Instagram involved in this operation.

In response, OpenAI banned the associated accounts and shared threat intelligence with relevant government, campaign, and industry stakeholders. The company reiterated its commitment to preventing abuse and improving transparency around AI-generated content, particularly ahead of upcoming elections worldwide.

Although the Iranian operation failed to achieve significant influence, OpenAI's actions highlight the growing challenge of countering AI-driven disinformation campaigns. The company stressed that transparency, responsible AI use, and cross-industry collaboration are needed to curb such attempts as the 2024 elections approach.