OpenAI just released two new open-weight models, gpt-oss-120b and gpt-oss-20b, that match or outperform similarly sized open models and rival some of OpenAI's own proprietary models on reasoning, tool use, and health-related tasks. They're optimized for real-world deployment, and the smaller one can run on a laptop.

Key Points

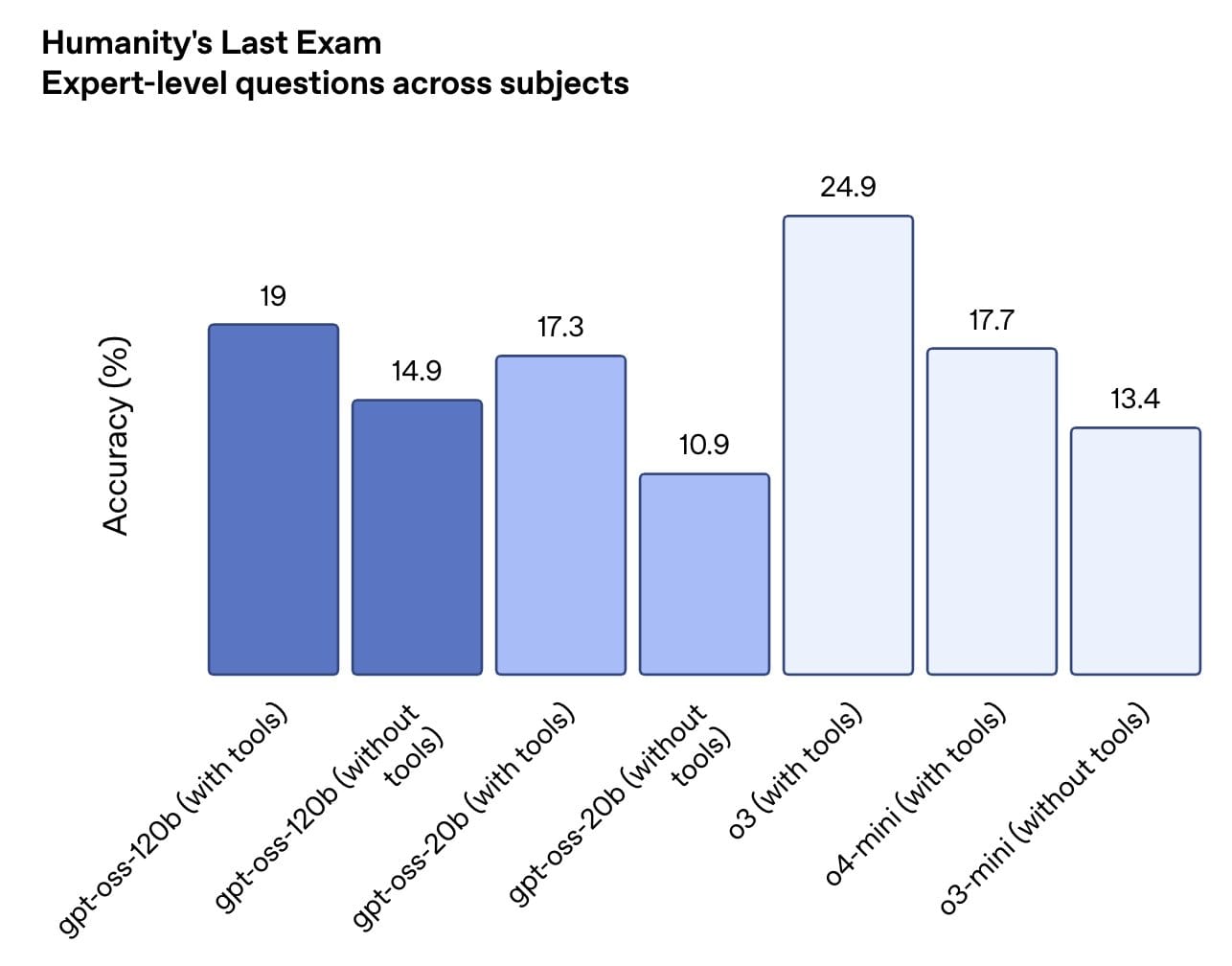

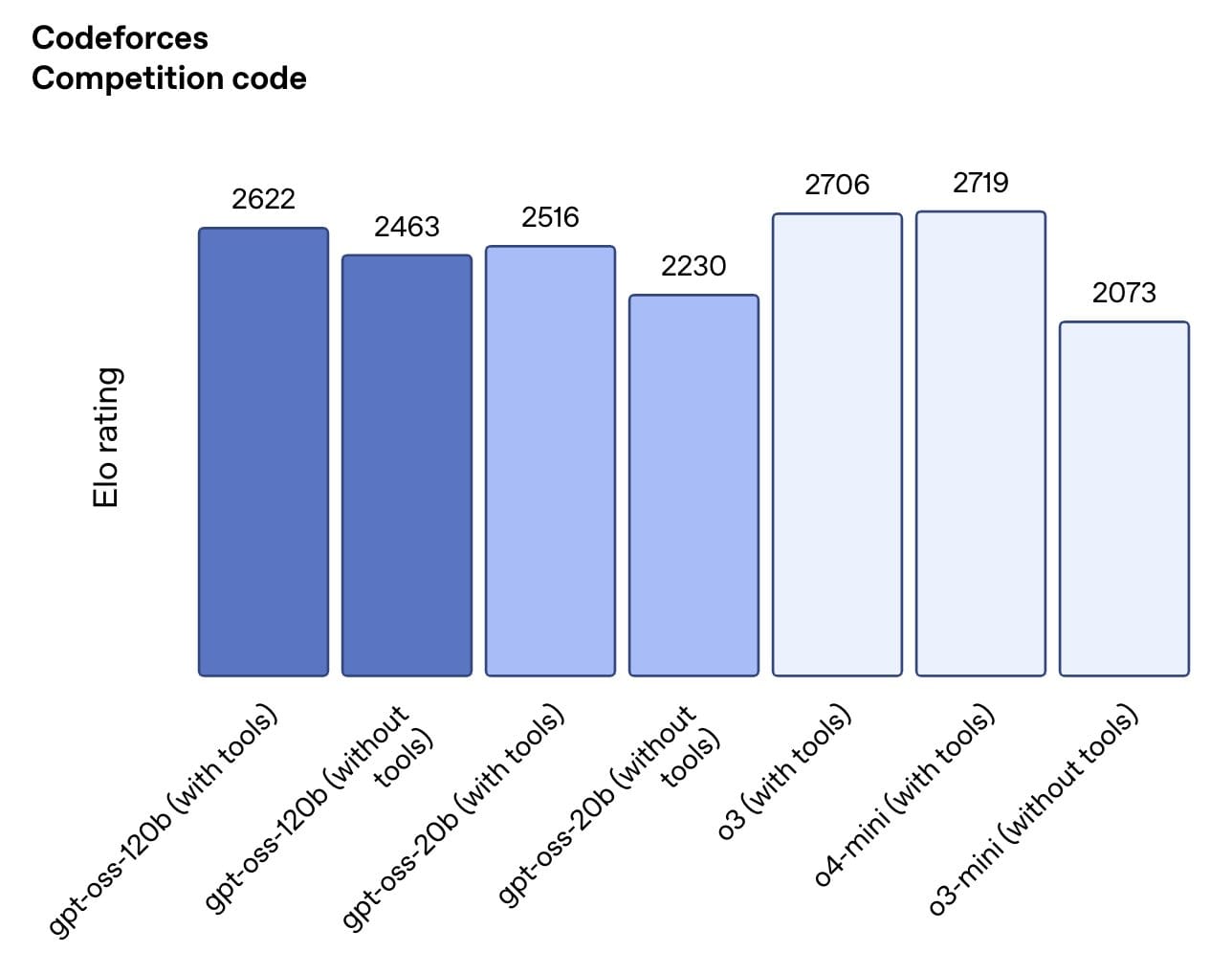

- gpt-oss-120b ≈ o4-mini on reasoning benchmarks

- gpt-oss-20b runs on laptops, rivals o3-mini

- Fully open under Apache 2.0

- Robust safety evals + $500K red teaming challenge

OpenAI just gave the open-weight AI ecosystem a major upgrade. Its newly released models, gpt-oss-120b and gpt-oss-20b, outperform most open models and, in some areas, even rival OpenAI's own proprietary reasoning models. Both are free to use under Apache 2.0.

gpt-oss is a big deal; it is a state-of-the-art open-weights reasoning model, with strong real-world performance comparable to o4-mini, that you can run locally on your own computer (or phone with the smaller size). We believe this is the best and most usable open model in the…

— Sam Altman (@sama) August 5, 2025

The flagship gpt-oss-120b runs on a single 80GB GPU and performs close to o4-mini on reasoning, tool use, and health-related tasks. The smaller gpt-oss-20b runs on devices with just 16GB of memory and beats o3-mini in several domains.
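A quick back-of-the-envelope calculation helps show why these memory figures are plausible: weight memory is roughly parameter count times bits per parameter, divided by 8. The sketch below assumes about 117B total parameters for gpt-oss-120b and about 21B for gpt-oss-20b, with every weight stored at about 4.25 bits (an MXFP4-style layout: 4-bit values plus one shared 8-bit scale per block of 32). None of these numbers come from this article, so treat them as assumptions, and treat the results as lower bounds, since real checkpoints keep some layers at higher precision and inference also needs memory for activations and the KV cache.

```python
# Back-of-the-envelope weight-memory estimate for the two gpt-oss models.
# ASSUMPTIONS (not from the article): ~117B parameters for gpt-oss-120b,
# ~21B for gpt-oss-20b, and all weights at ~4.25 bits per parameter.
# This ignores activations, KV cache, and layers kept at higher precision.

def weight_memory_gb(num_params: float, bits_per_param: float) -> float:
    """Return the memory needed to hold the weights, in gigabytes (1e9 bytes)."""
    return num_params * bits_per_param / 8 / 1e9

# MXFP4-style storage: a 4-bit element plus an 8-bit scale shared by 32 elements.
MXFP4_BITS = 4 + 8 / 32
# Unquantized bf16 baseline for comparison.
BF16_BITS = 16

models = {"gpt-oss-120b": 117e9, "gpt-oss-20b": 21e9}

for name, params in models.items():
    quantized = weight_memory_gb(params, MXFP4_BITS)
    full = weight_memory_gb(params, BF16_BITS)
    print(f"{name}: ~{quantized:.1f} GB at ~4.25 bits vs ~{full:.1f} GB in bf16")
```

Under these assumptions the script prints about 62.2 GB for gpt-oss-120b (versus 234.0 GB in bf16) and about 11.2 GB for gpt-oss-20b (versus 42.0 GB), which is consistent with the 80GB and 16GB figures above only because of the low-bit weight format.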

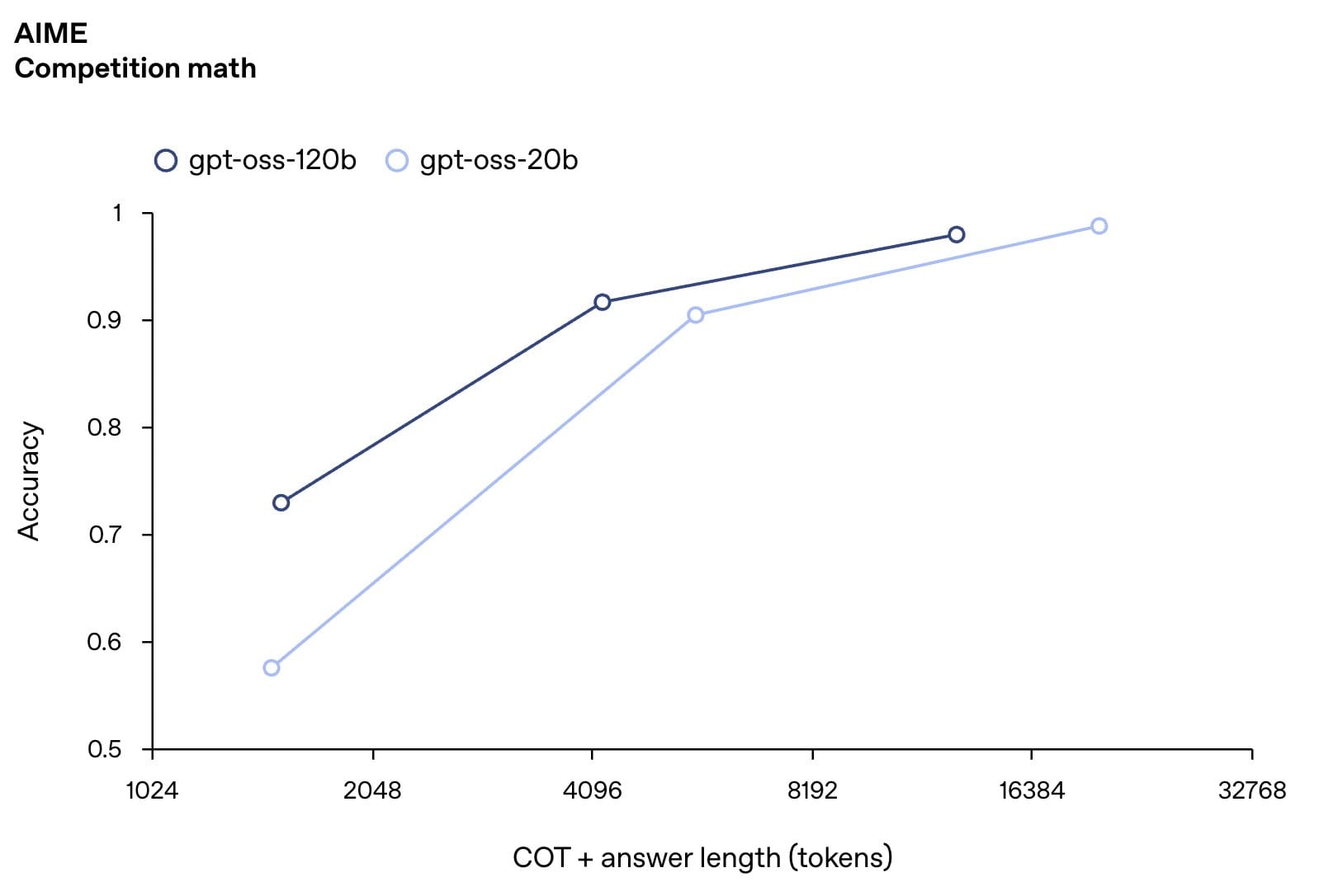

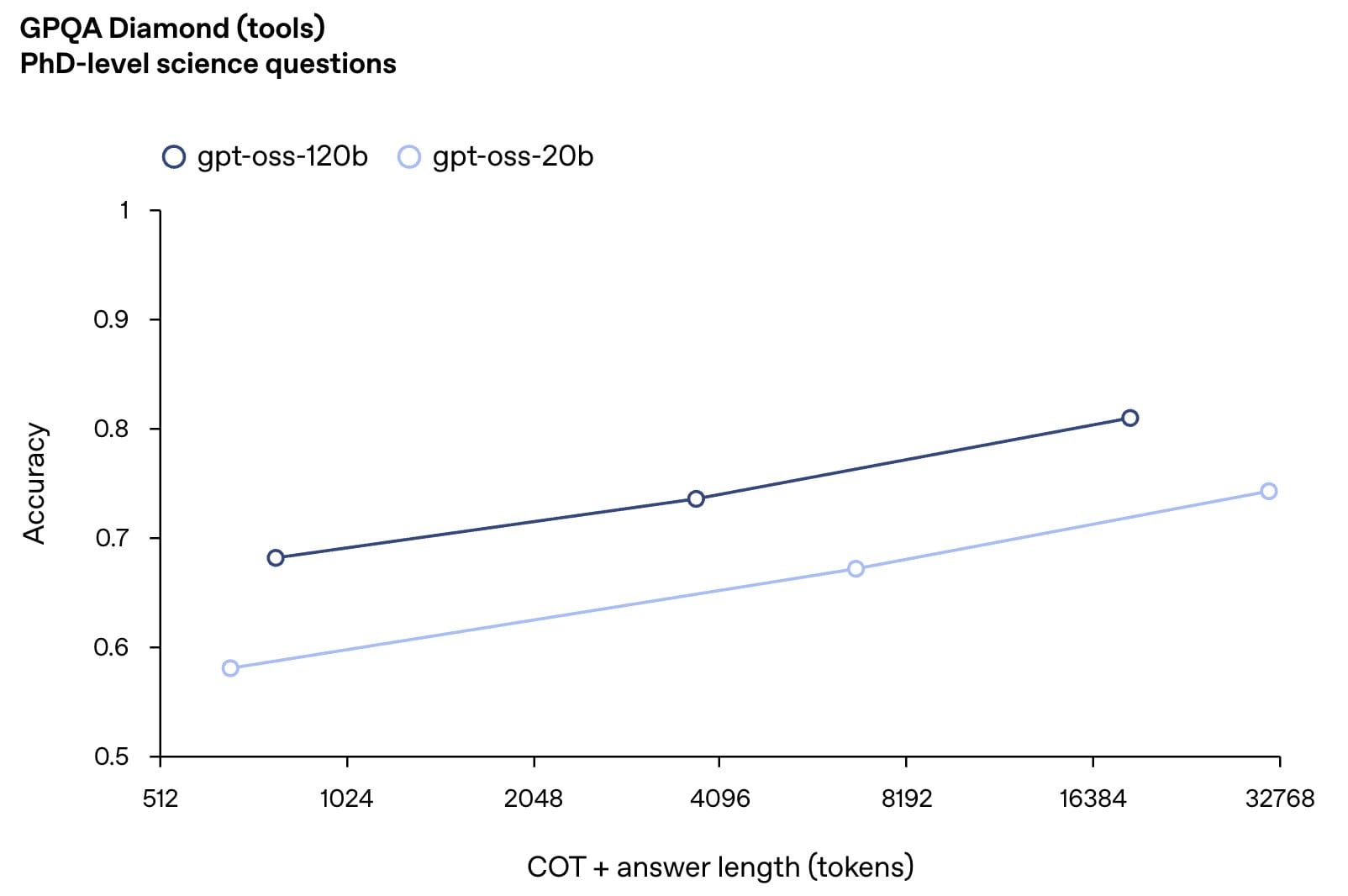

Both models are optimized for agentic workflows like tool use, structured outputs, and chain-of-thought reasoning. Developers can configure the level of reasoning effort—low, medium, or high—with a simple system prompt, balancing latency and performance.
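As a rough illustration of setting reasoning effort through the system prompt, here is a minimal sketch that builds a chat message list. The `Reasoning: <level>` wording reflects our understanding of OpenAI's guidance for gpt-oss and should be treated as an assumption to check against the model card; `build_messages` is a hypothetical helper, not part of any library, and the list-of-dicts shape is simply the common chat format many local runtimes accept.

```python
# Sketch: choosing gpt-oss reasoning effort via the system prompt.
# ASSUMPTION: the "Reasoning: <level>" phrasing; verify against the model card.
# build_messages is a hypothetical helper, not a library API.

ALLOWED_EFFORTS = ("low", "medium", "high")

def build_messages(user_prompt: str, effort: str = "medium") -> list[dict[str, str]]:
    """Return chat messages whose system prompt sets the reasoning effort."""
    if effort not in ALLOWED_EFFORTS:
        raise ValueError(f"effort must be one of {ALLOWED_EFFORTS}, got {effort!r}")
    return [
        {"role": "system", "content": f"Reasoning: {effort}"},
        {"role": "user", "content": user_prompt},
    ]

# Low effort for a quick lookup (lower latency), high effort for a harder problem.
fast = build_messages("What is the capital of France?", effort="low")
careful = build_messages("Sketch a proof that sqrt(2) is irrational.", effort="high")
print(fast[0]["content"])
print(careful[0]["content"])
```

The design keeps the effort level as a validated parameter so a typo fails loudly instead of silently sending an unrecognized instruction to the model.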

OpenAI has also raised the bar for safety. In addition to standard refusal and jailbreak resistance tests, it subjected gpt-oss-120b to worst-case fine-tuning in the bio and cyber domains. Even under strong adversarial training using OpenAI's internal RL stack, the model did not reach high-risk thresholds under OpenAI's Preparedness Framework.

The timing isn't coincidental. Chinese AI companies like DeepSeek have been eating OpenAI's lunch in the open-source space, releasing powerful models that cost a fraction of what American companies spend to develop similar capabilities. DeepSeek's R1 was reported to rival OpenAI's o1 on reasoning benchmarks, and DeepSeek put the final training run of V3, the base model R1 is built on, at roughly $6 million, while Sam Altman has said GPT-4 cost more than $100 million to train.

Sam Altman admitted in January that OpenAI had been "on the wrong side of history" when it comes to open-sourcing technology. Now they're doing something about it.

We’re launching a $500K Red Teaming Challenge to strengthen open source safety. Researchers, developers, and enthusiasts worldwide are invited to help uncover novel risks—judged by experts from OpenAI and other leading labs. https://t.co/EQfmJ39NZD

— OpenAI (@OpenAI) August 5, 2025

To support responsible development, OpenAI is publishing a full model card, developer guides, and a safety research paper. There’s also a $500K Red Teaming Challenge and a six-week hackathon with Hugging Face, NVIDIA, and others.

Open-weight models remain critical to developers and startups around the world. For those in regions with limited infrastructure especially, this release means access to top-tier open models without the compute burden or licensing friction. It's a welcome move and a good look for OpenAI.