OpenAI is taking steps to address the growing concern over the potential misuse of AI-generated content, particularly in the context of the upcoming elections. The company has announced two key initiatives to help society navigate the challenges posed by generative AI.

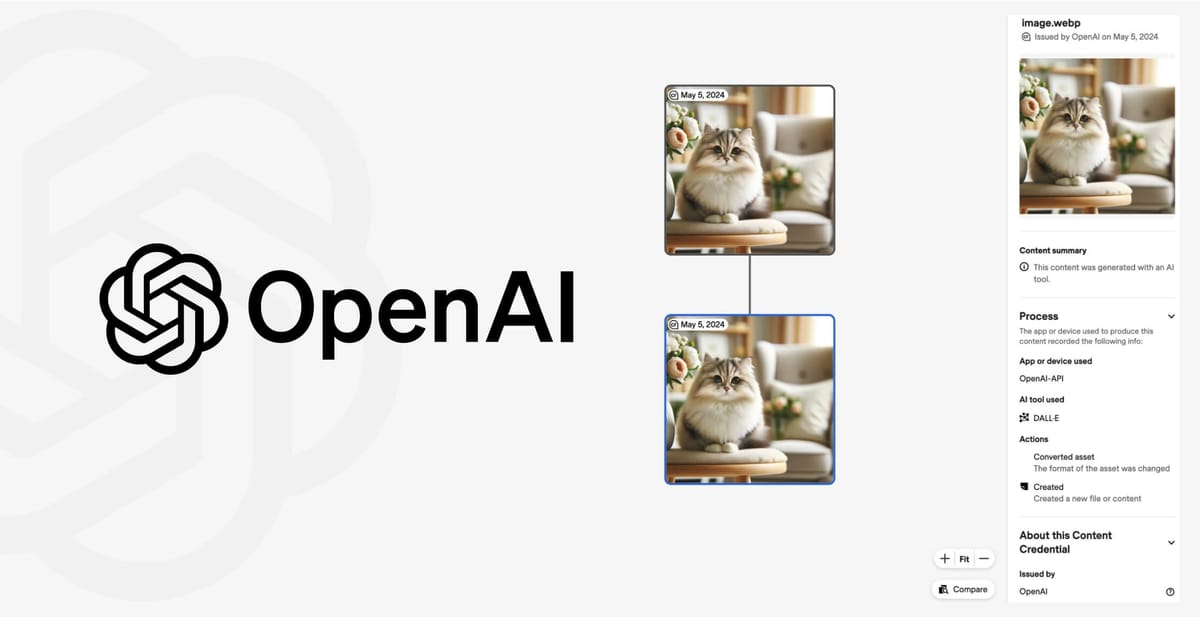

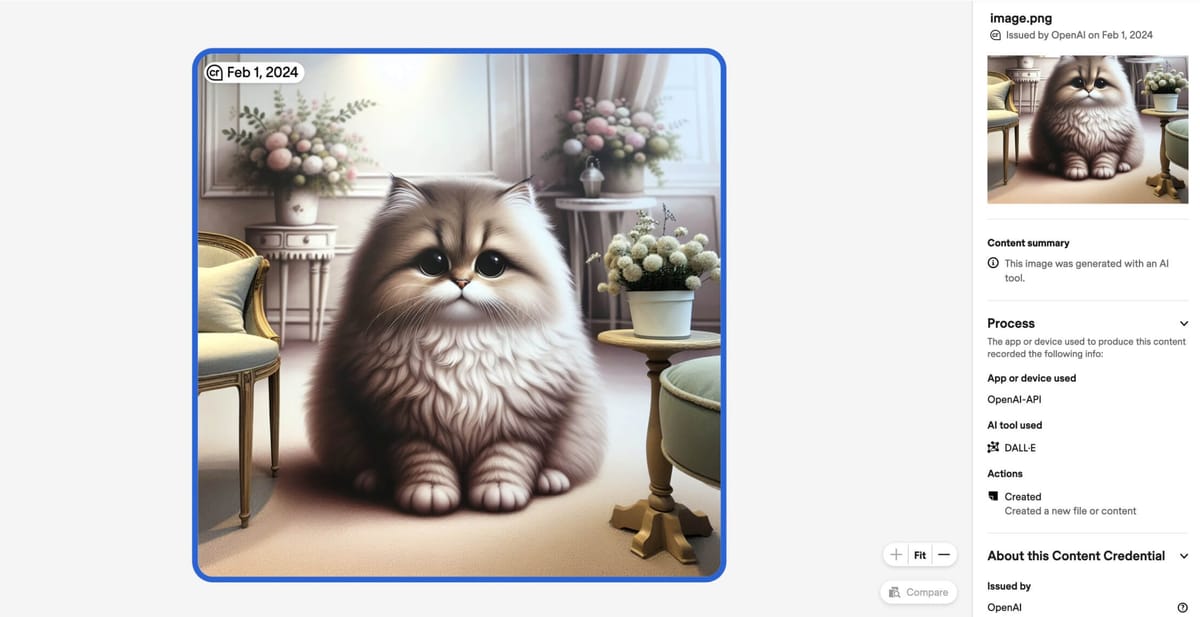

Firstly, OpenAI is joining the Coalition for Content Provenance and Authenticity (C2PA) Steering Committee. C2PA is an industry-wide effort to develop a standard for digital content certification, which can help users verify the origin and authenticity of online content. OpenAI has already begun adding C2PA metadata to images created by its DALL·E 3 model and plans to do the same for its upcoming video generation model, Sora.
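To make the idea of embedded provenance metadata concrete, here is a minimal sketch of how a reader might check whether a PNG file carries a C2PA manifest at all. It assumes the C2PA convention of storing the manifest in a PNG chunk of type `caBX`. The function names (`png_chunk_types`, `has_c2pa_chunk`, `make_chunk`) are hypothetical and chosen for illustration; this is not OpenAI's tooling. It only detects that a manifest chunk is present and does not validate the manifest or its cryptographic signature, which a real verifier would need to do.

```python
import struct
import zlib

PNG_SIGNATURE = b"\x89PNG\r\n\x1a\n"
# Assumption: C2PA manifests in PNG files live in a chunk of this type.
C2PA_CHUNK_TYPE = b"caBX"


def png_chunk_types(data: bytes) -> list:
    """Return the chunk types found in a PNG byte string, in file order."""
    if not data.startswith(PNG_SIGNATURE):
        raise ValueError("not a PNG file")
    types = []
    offset = len(PNG_SIGNATURE)
    # Each chunk is: 4-byte big-endian length, 4-byte type, payload, 4-byte CRC.
    while offset + 8 <= len(data):
        length, chunk_type = struct.unpack(">I4s", data[offset:offset + 8])
        types.append(chunk_type)
        offset += 8 + length + 4
        if chunk_type == b"IEND":
            break
    return types


def has_c2pa_chunk(data: bytes) -> bool:
    """True if a C2PA manifest chunk is present (presence only, no validation)."""
    return C2PA_CHUNK_TYPE in png_chunk_types(data)


def make_chunk(chunk_type: bytes, payload: bytes) -> bytes:
    """Hypothetical helper that builds one PNG chunk, used here for demo data."""
    crc = zlib.crc32(chunk_type + payload) & 0xFFFFFFFF
    return struct.pack(">I", len(payload)) + chunk_type + payload + struct.pack(">I", crc)
```

Calling `has_c2pa_chunk(open("picture.png", "rb").read())` on a local file (the filename is a placeholder) would report whether such a chunk exists. Because metadata like this can be stripped when an image is re-encoded or screenshotted, a missing chunk says nothing about whether the image was AI-generated.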

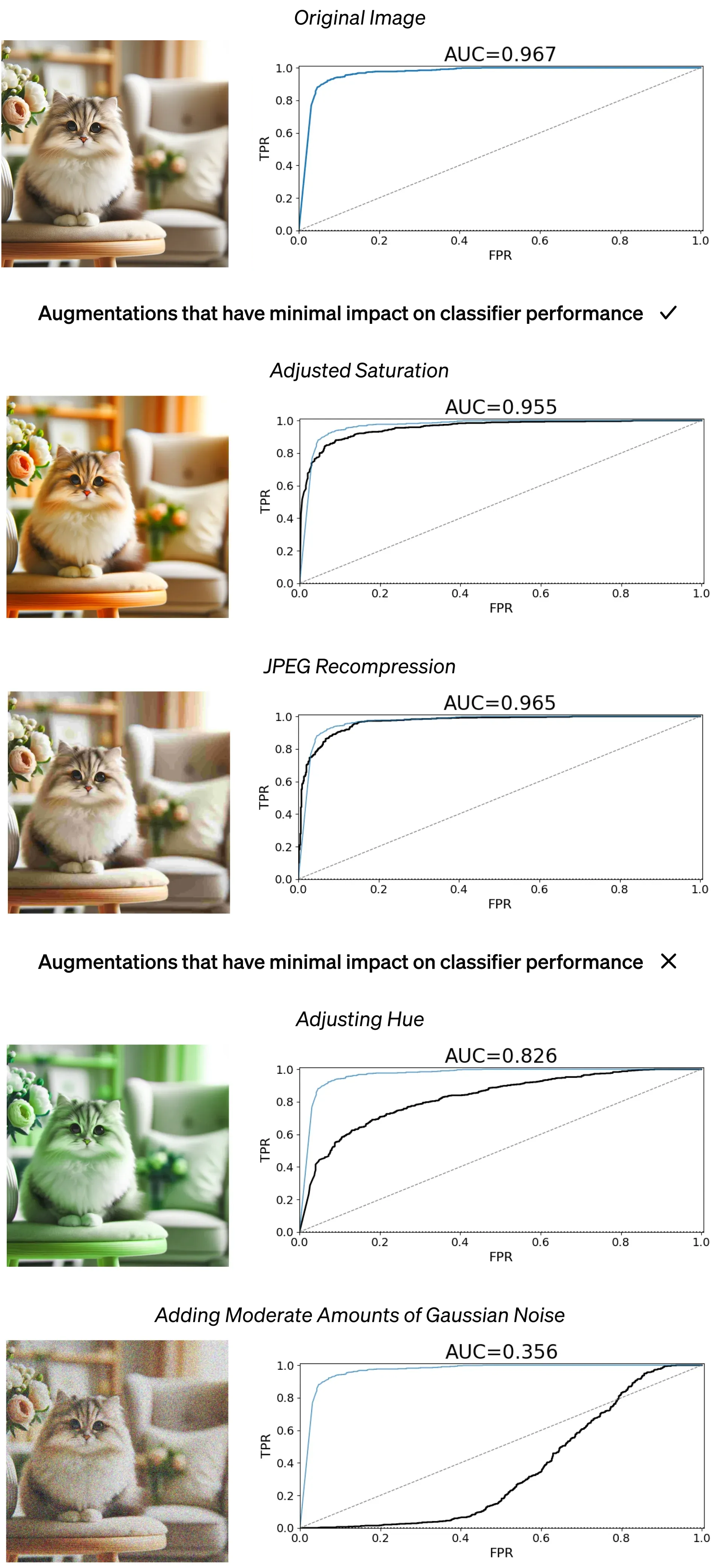

Secondly, the company is developing its own tools to identify content created by its services. This includes a classifier that predicts the likelihood that an image was generated by DALL·E 3. The tool, which is being made available to a select group of researchers for testing and feedback, has shown an accuracy of 98.8% in distinguishing DALL·E 3 images from non-AI-generated images.

Deepfakes, or AI-generated fake content, have already impacted political campaigning and voting in various countries, including Slovakia, Taiwan, and India. With elections on the horizon, experts warn that images, audio, and video generated by artificial intelligence could sway public opinion and influence election outcomes.

While these measures are a step forward, OpenAI acknowledges that they are only a small part of the battle against deepfakes, which are becoming increasingly sophisticated and widespread. The classifier is currently less accurate at telling DALL·E 3 images apart from images generated by other AI models, and certain modifications to an image can reduce its effectiveness. The company emphasizes the need for collective action from platforms, content creators, and intermediate handlers to facilitate the retention of metadata and promote transparency.

The company has previously acknowledged the potential for misuse of its Voice Engine technology, which can recreate a human voice with startling accuracy from just 15 seconds of source audio. To address this, access to Voice Engine is currently limited to trusted partners as a research preview. OpenAI has also embedded audio watermarks in generated content as a form of fraud detection, making it easier to identify AI-generated audio.

OpenAI's efforts are part of a broader industry initiative to combat the spread of misleading AI-generated content. As Sandhini Agarwal, an OpenAI researcher, notes, "there is no silver bullet" in the fight against deepfakes. Nonetheless, these tools represent an important step towards promoting authenticity and trust in the ever-evolving digital landscape.