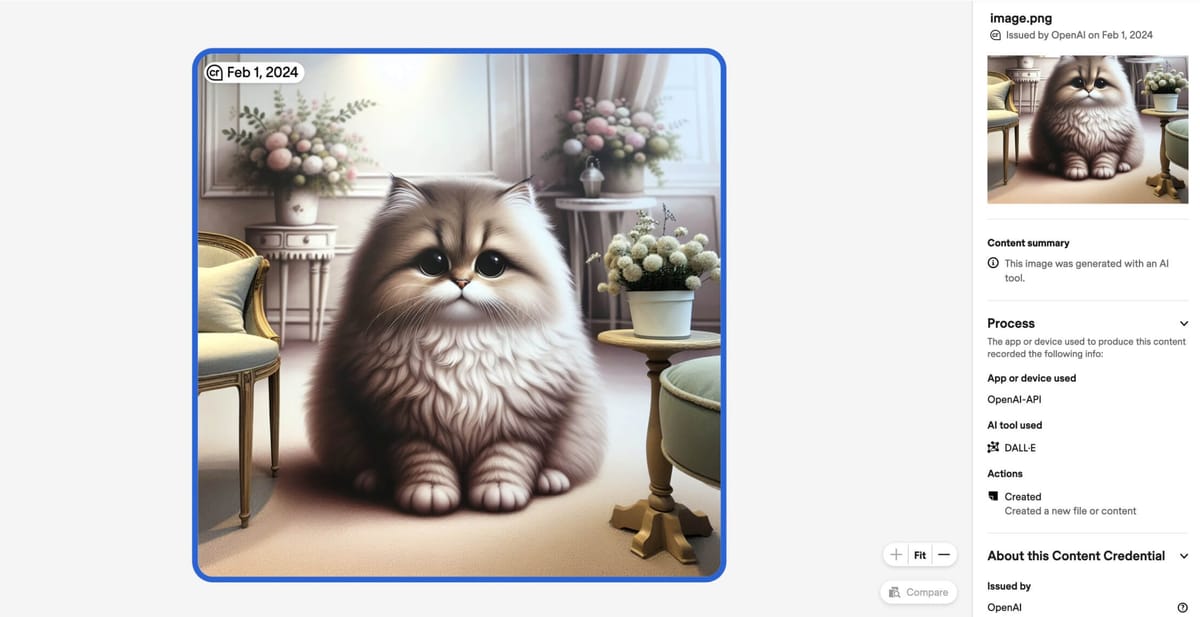

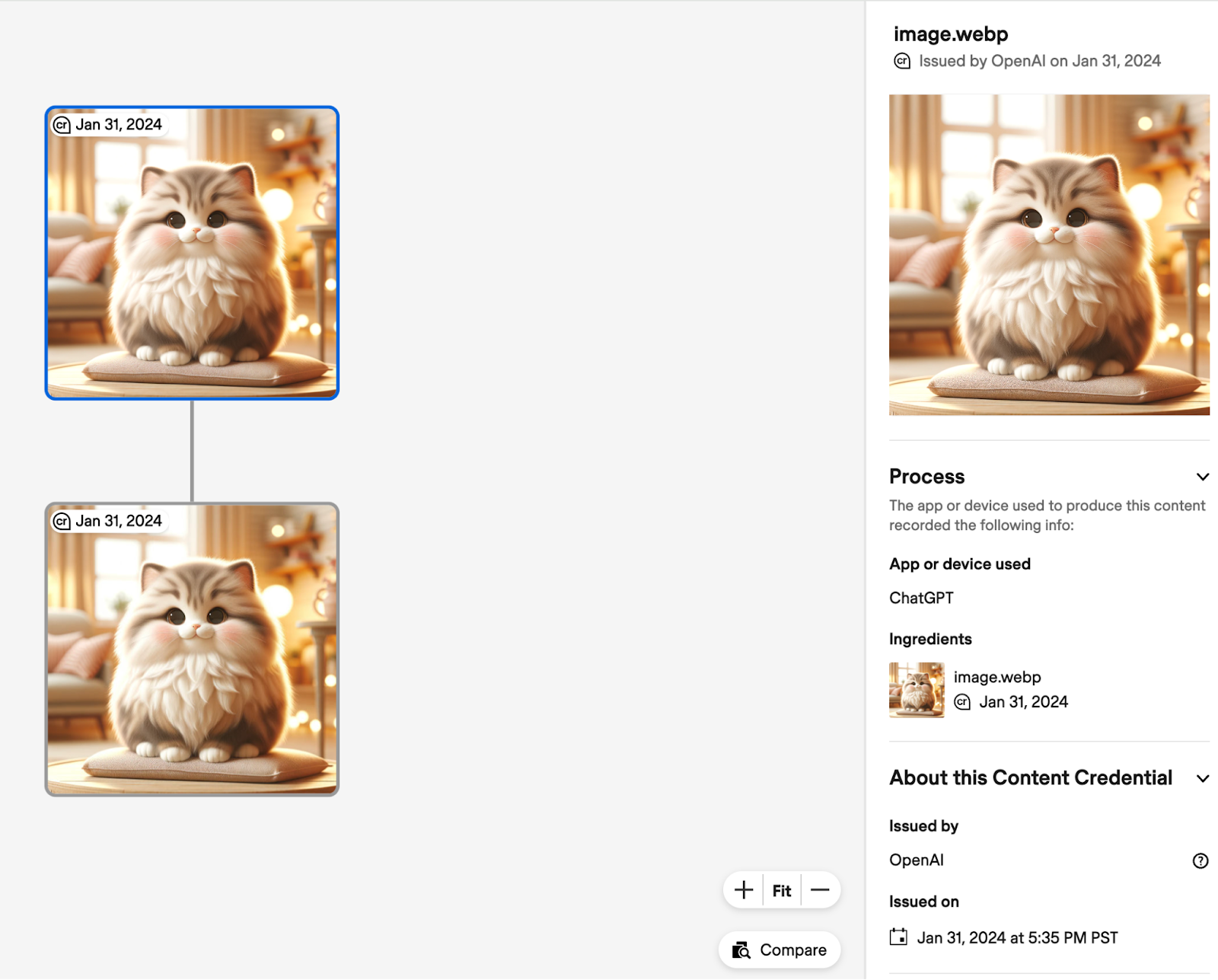

Following a similar announcement from Meta earlier today, OpenAI has updated its policies to specify that images created with DALL-E 3 will now include embedded metadata confirming they were AI-generated. The metadata standards come from the Coalition for Content Provenance and Authenticity (C2PA), an organization developing technology to certify the source and history of media content.

You probably haven't heard about C2PA, but its impact might soon be felt across the digital world. It's a Joint Development Foundation project, formed through an alliance between Adobe, Arm, Intel, Microsoft and Truepic.

C2PA unifies the efforts of the Adobe-led Content Authenticity Initiative (CAI), which focuses on systems to provide context and history for digital media, and Project Origin, a Microsoft- and BBC-led initiative that tackles disinformation in the digital news ecosystem.

OpenAI says images generated both through its API and within ChatGPT will contain C2PA metadata tags confirming the content came from DALL-E 3 models. This change will roll out to all mobile users by February 12th.

Adding C2PA data to images increases file size: by about 3-5% for images generated with the API and by 32% for images generated in ChatGPT. OpenAI says this will have a "negligible effect on latency and will not affect the quality of the image generation."

To be clear, C2PA isn't just for AI: camera companies like Leica and news organizations like the AP are also adopting it. The open standard allows any publisher or creator to embed certifiable information about an image's origins within the file itself. For AI-generated content, this enables better attribution and aims to limit misuse. You can use websites like Content Credentials Verify to check the metadata of an image.

OpenAI cautions that C2PA has limitations: "Metadata like C2PA is not a silver bullet to address issues of provenance. It can easily be removed either accidentally or intentionally." For example, social media platforms often strip image metadata, as do common actions like taking screenshots. However, more robust, tamper-proof digital watermarking technologies are also being adopted quickly by companies.
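To see why this kind of metadata is so easy to lose, here is a minimal Python sketch. It assumes the C2PA manifest in a PNG file sits in its own chunk of type `caBX`, which is our reading of the C2PA specification and worth double-checking. The function names and the tiny demo image are made up for illustration, and the placeholder chunk is not a real signed manifest. Nothing here validates provenance; it only shows that the manifest is a separable piece of the file.

```python
import struct
import zlib

PNG_SIGNATURE = b"\x89PNG\r\n\x1a\n"
C2PA_CHUNK = b"caBX"  # assumption: PNG chunk type used for C2PA manifests


def iter_chunks(png: bytes):
    """Yield (chunk_type, chunk_data) for each chunk in a PNG byte string."""
    if not png.startswith(PNG_SIGNATURE):
        raise ValueError("not a PNG file")
    pos = len(PNG_SIGNATURE)
    while pos < len(png):
        length, ctype = struct.unpack(">I4s", png[pos:pos + 8])
        yield ctype, png[pos + 8:pos + 8 + length]
        pos += 12 + length  # 4 length + 4 type + data + 4 CRC


def make_chunk(ctype: bytes, data: bytes) -> bytes:
    """Serialize one PNG chunk: length, type, data, CRC over type + data."""
    crc = zlib.crc32(ctype + data) & 0xFFFFFFFF
    return struct.pack(">I", len(data)) + ctype + data + struct.pack(">I", crc)


def has_c2pa(png: bytes) -> bool:
    """Report whether a caBX chunk is present (this does not verify it)."""
    return any(ctype == C2PA_CHUNK for ctype, _ in iter_chunks(png))


def strip_c2pa(png: bytes) -> bytes:
    """Return the same PNG with any caBX chunk dropped."""
    kept = [make_chunk(t, d) for t, d in iter_chunks(png) if t != C2PA_CHUNK]
    return PNG_SIGNATURE + b"".join(kept)


def build_demo_png(with_manifest: bool) -> bytes:
    """Build a 1x1 grayscale PNG, optionally with a placeholder caBX chunk."""
    ihdr = struct.pack(">IIBBBBB", 1, 1, 8, 0, 0, 0, 0)
    idat = zlib.compress(b"\x00\x00")  # filter byte + one pixel
    chunks = [make_chunk(b"IHDR", ihdr)]
    if with_manifest:
        chunks.append(make_chunk(C2PA_CHUNK, b"placeholder, not a real manifest"))
    chunks += [make_chunk(b"IDAT", idat), make_chunk(b"IEND", b"")]
    return PNG_SIGNATURE + b"".join(chunks)


tagged = build_demo_png(with_manifest=True)
stripped = strip_c2pa(tagged)
print(has_c2pa(tagged), has_c2pa(stripped))
```

The stripped file is still a valid image with identical pixels, which is essentially what happens when a platform re-encodes an upload or someone takes a screenshot. To actually validate a manifest, use a real verifier such as Content Credentials Verify.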