OpenAI’s most powerful model just got a major upgrade, and a massive price cut to go with it. Today, the company announced that o3-pro, its new flagship reasoning model, is available to ChatGPT Pro and Team users, and to developers via the API at a fraction of what its predecessor, o1-pro, cost.

Key Points:

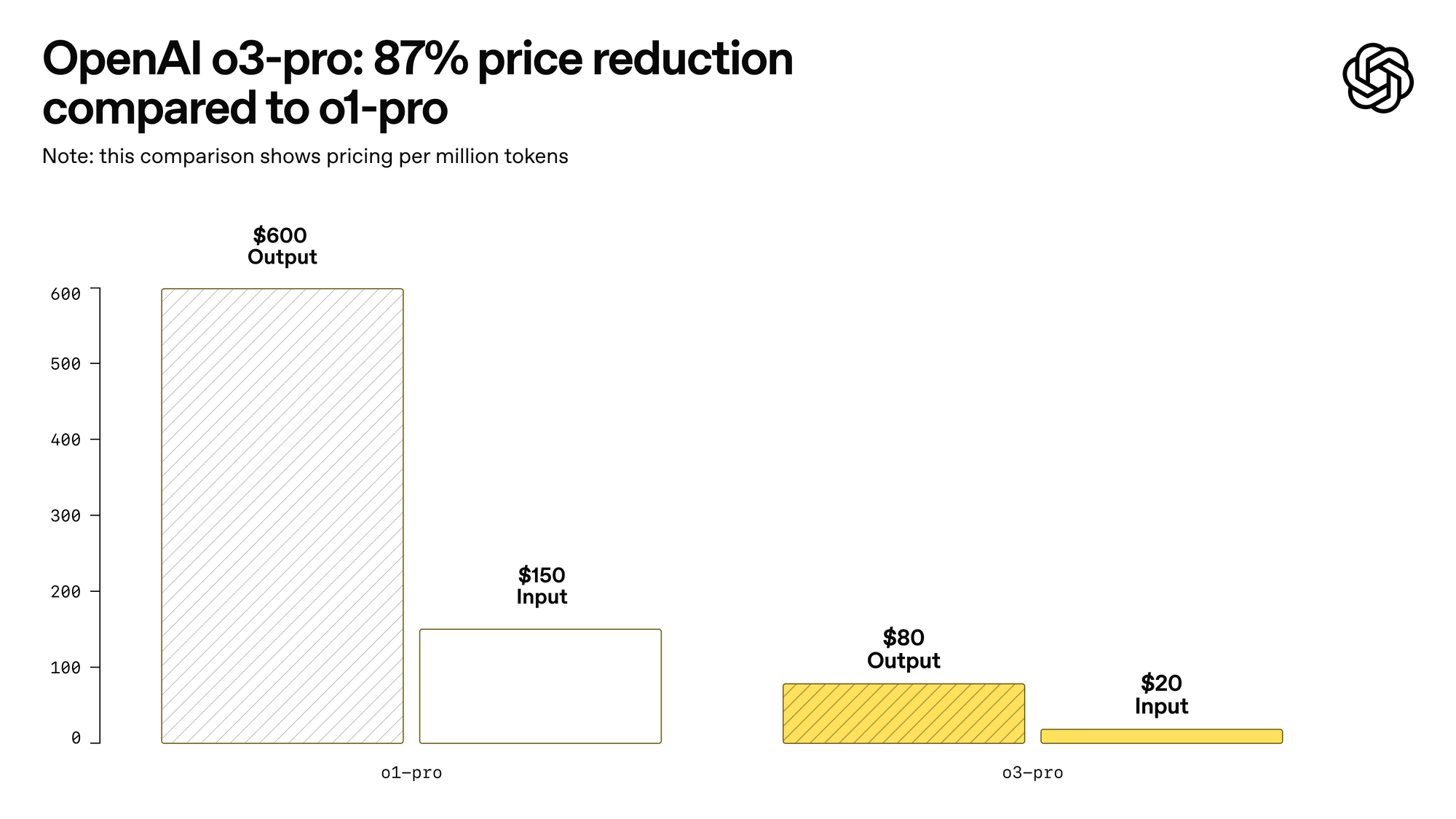

- Massive price cuts: o3-pro costs $20 for input and $80 for output per million tokens (87% cheaper than o1-pro), while the base o3 model dropped 80% to just $2 for input and $8 for output per million tokens

- Enhanced reasoning: Expert evaluations consistently prefer o3-pro over the standard o3 model across all categories, especially in science, programming, and business tasks

- Tool integration: Unlike o1-pro, o3-pro can search the web, analyze files, run Python code, and remember previous conversations, though responses take longer to complete

If you’re a Pro or Team user in ChatGPT, o3-pro just replaced o1-pro in the model picker. And if you’re a developer working with OpenAI’s API, you can now access o3-pro at a steep discount: it’s 87% cheaper than o1-pro. OpenAI also cut the price of o3 by 80%. That’s not a typo. The company is clearly aiming to make frontier-level performance far more accessible.
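To see where those percentages come from, here is a small cost calculator. The o3-pro ($20/$80) and new o3 ($2/$8) rates are the ones quoted above; the prior rates for o1-pro ($150/$600) and o3 ($10/$40) are assumed list prices that this article does not state, included only so the arithmetic can be checked. The function names and the sample workload are hypothetical.

```python
# Per-million-token prices in USD as (input, output).
# "o3-pro" and "o3 (new)" come from the announcement; "o1-pro" and
# "o3 (old)" are ASSUMED prior list prices, used for illustration only.
PRICES = {
    "o1-pro": (150.0, 600.0),   # assumption
    "o3-pro": (20.0, 80.0),
    "o3 (old)": (10.0, 40.0),   # assumption
    "o3 (new)": (2.0, 8.0),
}

def cost_usd(model: str, input_tokens: int, output_tokens: int) -> float:
    """Dollar cost of a workload with the given token counts."""
    in_price, out_price = PRICES[model]
    return (input_tokens * in_price + output_tokens * out_price) / 1_000_000

def pct_cheaper(old_cost: float, new_cost: float) -> float:
    """Percentage saved when the same workload moves from old to new pricing."""
    return 100 * (1 - new_cost / old_cost)

# Hypothetical workload: 2M input tokens and 500k output tokens.
workload = (2_000_000, 500_000)

o1_pro = cost_usd("o1-pro", *workload)
o3_pro = cost_usd("o3-pro", *workload)
print(f"o1-pro: ${o1_pro:.2f}, o3-pro: ${o3_pro:.2f}, "
      f"saving {pct_cheaper(o1_pro, o3_pro):.1f}%")  # saving 86.7%

o3_old = cost_usd("o3 (old)", *workload)
o3_new = cost_usd("o3 (new)", *workload)
print(f"o3: ${o3_old:.2f} -> ${o3_new:.2f}, "
      f"saving {pct_cheaper(o3_old, o3_new):.1f}%")  # saving 80.0%
```

Because input and output prices fall by the same ratio for each model, the saving doesn’t depend on the workload’s input/output mix: under these assumed prior prices it is about 86.7% for o3-pro (rounded to the 87% OpenAI quotes) and exactly 80% for o3.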

While reasoning models like o1 and o3 have delivered impressive results on complex tasks (think PhD-level science questions and competitive programming challenges), their high costs have kept them out of reach for many developers. o3-pro changes that equation dramatically.

What’s the difference? o3-pro is built on the same underlying model as o3, but it is designed to think longer, which makes it more reliable, especially on complex tasks. Think of it as o3 with better long-range reasoning and fewer hallucinations. That consistency is what OpenAI’s internal “4/4 reliability” evaluation is designed to test: to pass, the model must answer a question correctly on all four attempts, not just once.
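OpenAI hasn’t published code for its “4/4 reliability” evaluation, so the sketch below only illustrates the pass rule as described: a question counts only if all four attempts are correct. The attempt results are made up, the function names are hypothetical, and the final line assumes attempts are independent, in which case a model with per-attempt accuracy p passes a question with probability p⁴.

```python
from typing import Dict, List

# Hypothetical per-question outcomes: four attempts each, True = correct.
# These are made-up numbers, not OpenAI results.
results: Dict[str, List[bool]] = {
    "q1": [True, True, True, True],
    "q2": [True, False, True, True],
    "q3": [True, True, True, True],
}

def single_attempt_accuracy(results: Dict[str, List[bool]]) -> float:
    """Ordinary accuracy: every individual attempt counts on its own."""
    attempts = [ok for runs in results.values() for ok in runs]
    return sum(attempts) / len(attempts)

def four_of_four(results: Dict[str, List[bool]]) -> float:
    """Strict score: a question passes only if all four attempts are correct."""
    passed = sum(1 for runs in results.values() if len(runs) == 4 and all(runs))
    return passed / len(results)

print(f"per-attempt accuracy: {single_attempt_accuracy(results):.3f}")  # 0.917
print(f"4/4 reliability:      {four_of_four(results):.3f}")             # 0.667

# If attempts were independent with per-attempt accuracy p (an assumption),
# a question would pass 4/4 with probability p ** 4.
p = 0.9
print(f"p = {p}: expected 4/4 rate {p ** 4:.3f}")  # 0.656
```

Under that independence assumption, a model that is right 90% of the time per attempt would pass only about 66% of questions, which is why a 4/4 score rewards consistency rather than occasional correct answers.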

Developers have gravitated toward o1-pro for math, science, and coding tasks since its debut, and o3-pro aims to extend that lead. It supports tools like web browsing, code execution, vision analysis, and memory, though OpenAI notes that tool use can make responses slower. For high-stakes queries or longer workflows, that’s a tradeoff many will gladly accept.

Reviewers in OpenAI’s expert evaluations consistently preferred o3-pro to both o1-pro and o3, calling out gains in accuracy, clarity, and instruction-following. By OpenAI’s account, it is now the best-performing option across key domains like education, programming, business, and even writing help.

There are a few caveats. Temporary chats with o3-pro are currently disabled while OpenAI fixes a bug, and o3-pro doesn’t support image generation or Canvas yet. But for most serious use cases, especially on the developer side, those limitations won’t be dealbreakers.

So yes, o3-pro is slower. But it’s smarter, more capable, and a whole lot cheaper than o1-pro. For developers and power users, that’s a combination that’s hard to ignore.