Elon Musk's x.AI research lab has announced a preview of its first multimodal model, Grok-1.5 Vision (Grok-1.5V). It is a notable show of progress for a startup founded just nine months ago. This upgraded version of the company's large language model adds visual capabilities, particularly for understanding and interacting with the physical world.

Grok-1.5V can process a wide range of visual information, including documents, diagrams, charts, and photographs. According to x.AI, it excels at multi-disciplinary reasoning and at understanding spatial relationships in the physical world, outperforming its peers on the company's new RealWorldQA benchmark.

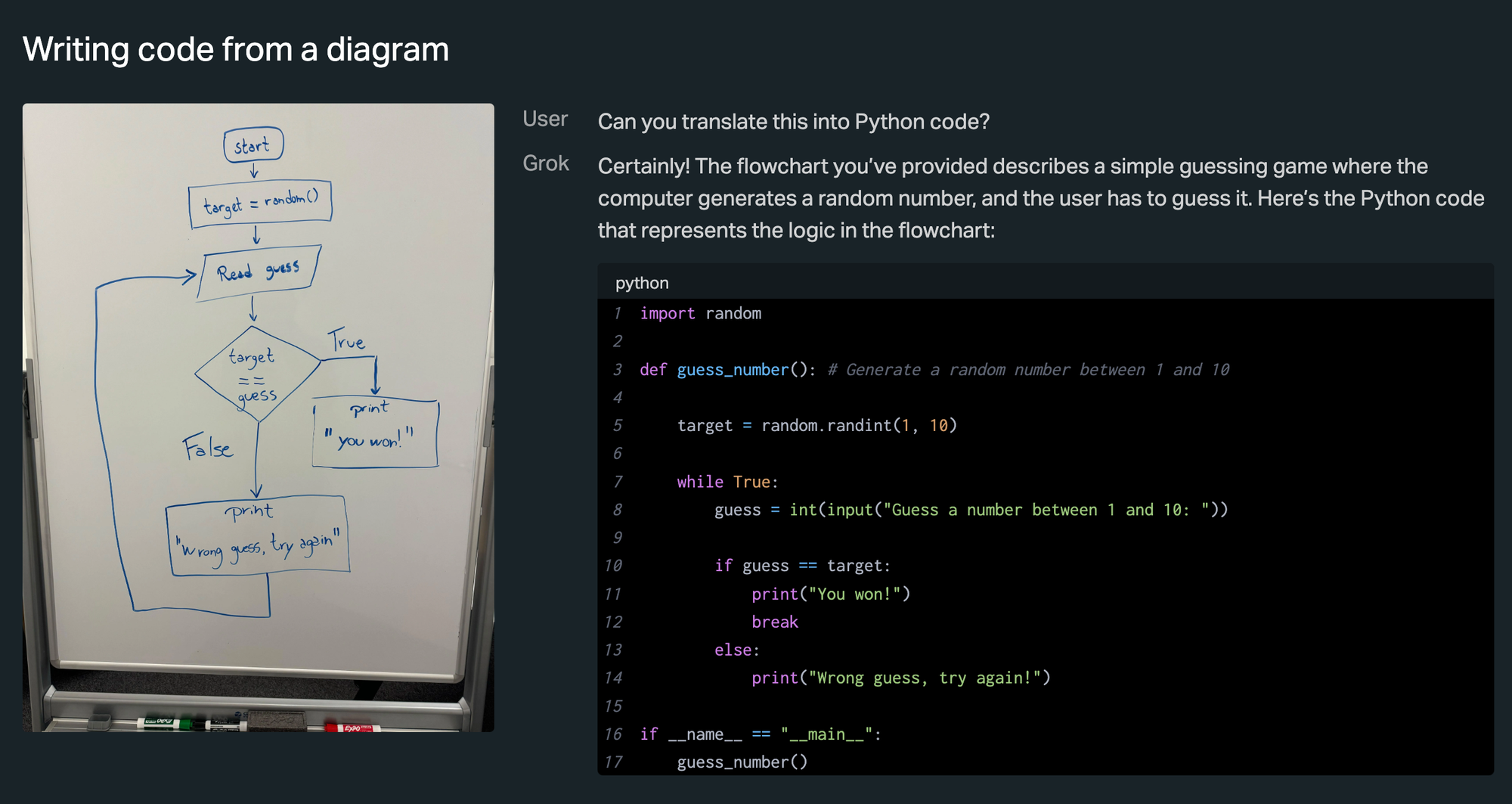

In a blog post, the startup showed off various applications of Grok-1.5V. The examples include writing working code from a drawing, calculating calories from a photo of a nutrition label, coming up with bedtime stories from children's drawings, explaining memes, converting tables to CSV format, and even offering advice on home maintenance issues such as rotten wood on a deck. Together, they show a model that is both versatile and practical.

"Advancing both our multimodal understanding and generation capabilities are important steps in building beneficial AGI that can understand the universe," states the x.AI blog post announcing the preview. The research lab expresses excitement about releasing RealWorldQA to the community and plans to expand it as their multimodal models improve.

The introduction of RealWorldQA underscores x.AI's commitment to advancing AI's understanding of the physical world, a crucial step in developing useful real-world AI assistants. The benchmark, which is available for download, contains over 760 images with question-and-answer pairs. While many of the examples may seem relatively easy for humans, they often pose a challenge for frontier models, which makes Grok-1.5V's results on the benchmark notable.

Earlier this week, Meta also released its OpenEQA benchmark, designed to assess an AI model's understanding of physical spaces. The benchmark includes over 1,600 questions about real-world environments, testing a model's ability to recognize objects, reason spatially, and apply commonsense knowledge. It will be interesting to see how Grok-1.5V performs on it, especially given its touted capabilities in understanding the physical world.

x.AI says it plans to make significant improvements to both its multimodal understanding and generation capabilities across modalities, including images, audio, and video, in the coming months. The company says Grok-1.5V will be available soon to early testers and existing Grok users.