With deepfakes, misinformation campaigns, and algorithmic bias posing serious threats to electoral integrity, many people are rightfully concerned about AI's impact on the 2024 US presidential election. However, leading AI companies, including OpenAI, Microsoft, Google, and Anthropic, have taken measures to safeguard elections.

This article highlights how, over the past year, Anthropic has prioritized preventing misuse of its AI technology in ways that could undermine democratic processes. Here are five key steps the company has taken to protect election integrity:

1. Prohibiting Political Campaigning and Lobbying

Anthropic updated its usage policy to explicitly prohibit political campaigning and lobbying. This includes banning the use of its AI for promoting specific candidates, parties, or issues, as well as for targeted political campaigns or soliciting votes and financial contributions. The company also forbids using its tools to generate misinformation about election laws, candidates, and related topics.

2. Fighting Election Misinformation

Anthropic has strict rules against using its AI to spread misinformation about election laws, candidates, or voting processes. The rules also prohibit generating content aimed at targeting voting machines or obstructing vote counting or certification. Because Claude's outputs are limited to text (no images, audio, or video), Anthropic also reduces the risk of election-related deepfakes.

3. Improved Detection and Enforcement

The company deployed systems that combine automated tools with human oversight to catch policy violations. These methods include modifying prompts on claude.ai, auditing API use cases, and, in extreme instances, suspending user accounts. Anthropic also partnered with cloud providers like AWS and Google to detect misuse of its models on those platforms, creating a more comprehensive monitoring network.

4. Red-Teaming and Automated Evaluations

Anthropic regularly conducts "red team" exercises and works with external experts to probe its systems for vulnerabilities. This includes Policy Vulnerability Testing and automated evaluations to assess political parity, refusal rates for harmful queries, and robustness against misinformation. These tests, involving hundreds of prompts across multiple model variations, allow Anthropic to continuously refine its policies and technical safeguards.

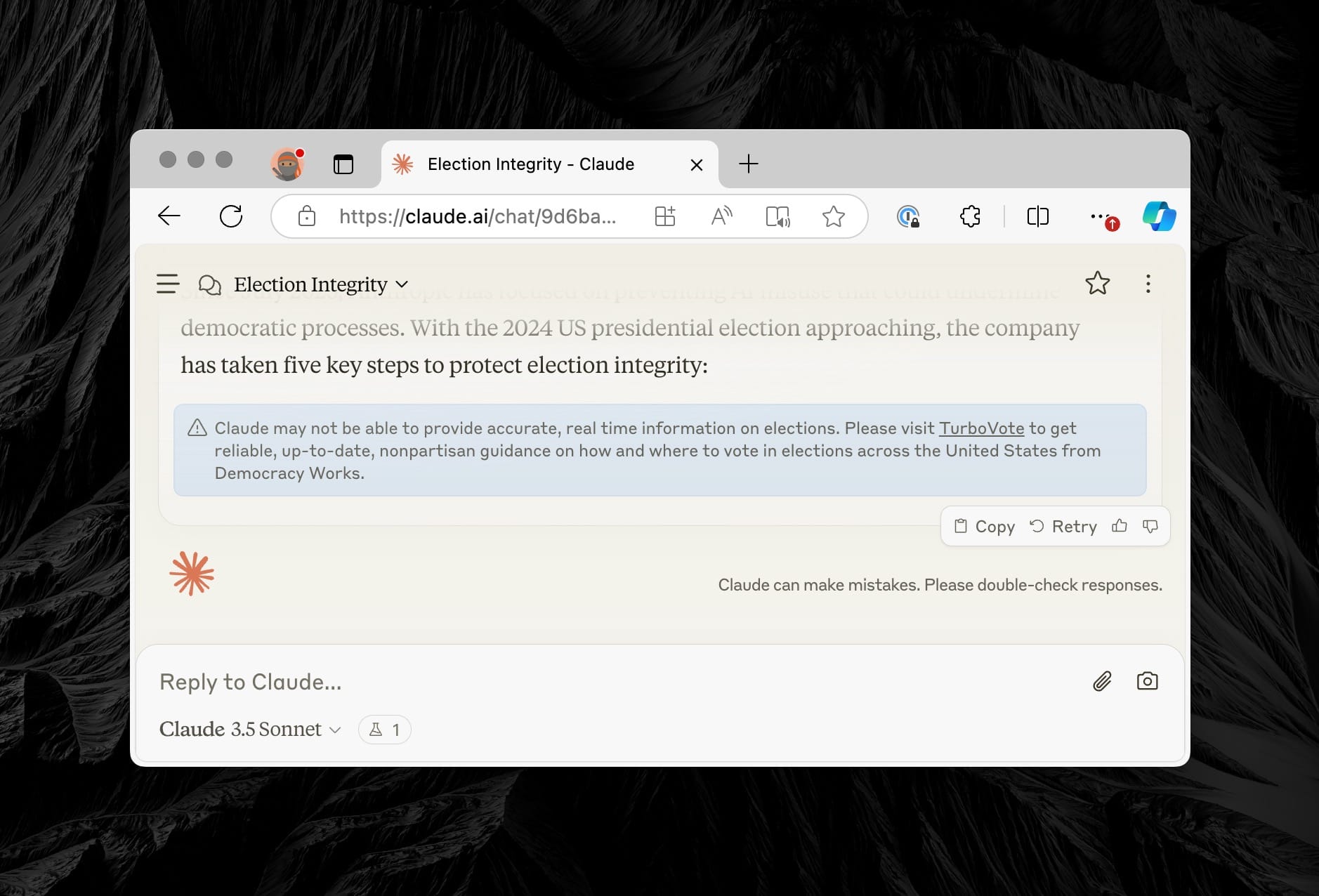
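To make the idea of an automated evaluation concrete, here is a minimal sketch of how refusal rates and a simple parity check could be computed from labeled test results. It is not Anthropic's actual tooling: the function names `refusal_rates` and `parity_gap`, the group labels, and the sample data are all hypothetical, and it assumes each response has already been judged a refusal or not.

```python
from collections import defaultdict

def refusal_rates(records):
    """Return {group: fraction of responses judged to be refusals}.

    `records` is an iterable of (group_label, refused) pairs. This format
    is an assumption made for illustration.
    """
    totals = defaultdict(int)
    refusals = defaultdict(int)
    for group, refused in records:
        totals[group] += 1
        if refused:
            refusals[group] += 1
    return {group: refusals[group] / totals[group] for group in totals}

def parity_gap(rates, group_a, group_b):
    """Absolute difference in refusal rate between two groups of prompts."""
    return abs(rates[group_a] - rates[group_b])

# Made-up results: prompts that should be refused, plus comparable
# prompts about two hypothetical parties.
sample = [
    ("harmful", True), ("harmful", True), ("harmful", False), ("harmful", True),
    ("party_a", False), ("party_a", True),
    ("party_b", False), ("party_b", False),
]

rates = refusal_rates(sample)
gap = parity_gap(rates, "party_a", "party_b")
```

In a check like this, a high refusal rate on the harmful prompts and a small gap between the two party groups would be the desired outcome. A large gap would suggest the model treats comparable political prompts unevenly.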

5. Redirecting to Authoritative Voting Information

Because Claude doesn't have real-time election data, Anthropic has added a pop-up that redirects users asking election-related questions to accurate sources. For example, users are directed to TurboVote for up-to-date voting information, so they receive reliable and timely guidance.
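As a rough illustration of how such a redirect could work, the sketch below flags election-related messages with a keyword pattern and returns a notice pointing to TurboVote. How Anthropic actually detects these queries is not described here, so the keyword list, the notice wording, and the `election_notice` function are assumptions made purely for illustration.

```python
import re
from typing import Optional

# Assumed keyword list for illustration only; a production system would
# likely rely on a more robust classifier than a regular expression.
ELECTION_PATTERN = re.compile(
    r"\b(vote|votes|voting|voter|ballot|ballots|election|elections|polling place)\b",
    re.IGNORECASE,
)

# Hypothetical notice text, not the wording of the real pop-up.
REDIRECT_NOTICE = "For up-to-date voting information, please consult TurboVote."

def election_notice(user_message: str) -> Optional[str]:
    """Return a redirect notice if the message looks election-related."""
    if ELECTION_PATTERN.search(user_message):
        return REDIRECT_NOTICE
    return None
```

Calling `election_notice("Where is my polling place?")` returns the notice, while an unrelated question such as `election_notice("How do I bake bread?")` returns `None`. Keyword matching is simple to audit but will miss paraphrased questions.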

Anthropic's proactive measures during this critical election year show a company committed to responsible AI development in practice, not one merely paying lip service to it. However, it's important to recognize that despite these efforts, bad actors continue to misuse AI tools, evolving their techniques in an ongoing arms race.

The fight against election interference is constant, with both AI companies and adversaries continuously adapting their strategies. Nevertheless, we should be encouraged by the progress made. The same AI technologies that pose risks also offer powerful tools to detect and combat misinformation.

This dynamic will likely be our reality moving forward. It underscores the need for investing in AI literacy as a society and fostering continued collaboration between tech companies, policymakers, and the public to ensure AI supports rather than undermines our democratic processes.