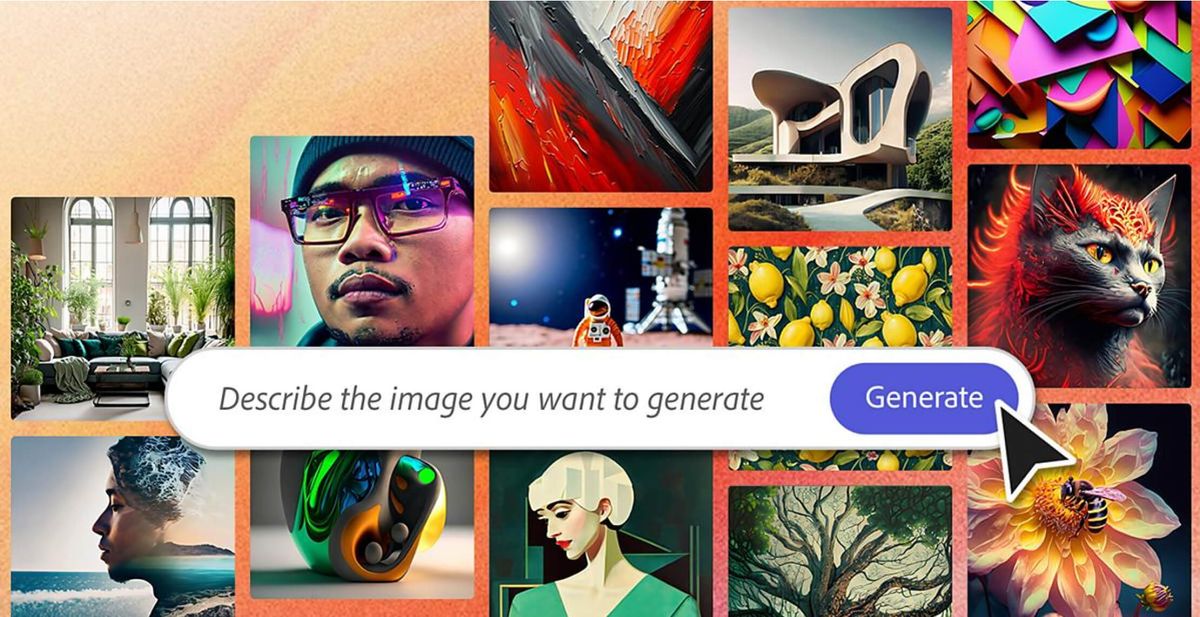

With the rise of powerful generative AI tools like Midjourney and Stable Diffusion, it's now easier than ever for anyone to create art, photos, videos and other content by simply typing in a text prompt. But this technology also raises potential issues around impersonating the style of real artists without their permission.

To address these concerns, Adobe has proposed that Congress enact a Federal Anti-Impersonation Right (FAIR) Act aimed specifically at preventing the intentional misuse of AI tools to imitate an artist's personal style or likeness for commercial gain.

The intent is to give artists legal recourse if their artistic style is closely copied by others using generative AI models. Adobe argues that such copying could enable unfair competition and cause economic harm to artists whose work was part of the training datasets for these AI systems.

Under the proposed law, liability would apply specifically to intentional impersonations using AI that are done for commercial purposes. Independent creations or accidental style similarities would not incur penalties. The right would also cover unauthorized use of someone's likeness.

On one hand, the FAIR Act could offer artists important protections in an age where their styles can be mimicked with unprecedented ease. It provides artists with a means to defend their livelihoods if their work is clearly being exploited by others for profit using AI.

On the other hand, it raises many questions about how it would work in practice. For example, the FAIR Act specifies that there would be no liability if the AI accidentally generates work similar in style to an existing artist or if the generative AI creator had no knowledge of the original artist's work. However, establishing intent could be complex and may require monitoring and storing users' prompts.

If the onus of proving intent falls on the artists who claim to have been impersonated, this could necessitate invasive scrutiny of the accused's creative process, potentially infringing on privacy rights. The accused could simply argue that any similarities were coincidental, leaving the original artist with a burden of proof that might be almost impossible to meet without some form of surveillance or data retention by the AI companies.

On the flip side, if companies were required to monitor and store user prompts to demonstrate intent, this could raise significant privacy and ethical concerns. Data retention raises questions about how long such information should be stored, who has access to it, and what else it could be used for. Striking the right balance between protecting artists and enabling creative exploration will be key.

As part of its creator-first approach to AI, Adobe aims to give artists practical advantages with its Firefly generative models while also upholding accountability and transparency through initiatives like the Content Authenticity Initiative. Adobe states its vision is to build AI that enhances creativity rather than replaces it.

The company stresses that its proposal focuses specifically on clear, intentional imitation for commercial gain. Legitimately learning from and evolving an artist's style would not incur liability.

Adobe hopes to work with the creative community to refine solutions and explore the details of implementing and enforcing legislation that will both protect artists and encourage further innovation.