San Francisco-based startup Coval has raised $3.3 million in seed funding to expand its AI agent evaluation platform. The company is applying testing methodologies from the self-driving car industry to assess and improve the performance of AI voice and chat agents.

Key Points

- Coval closed a $3.3 million round led by MaC Venture Capital, joined by General Catalyst, Y Combinator, and others.

- Founder and CEO Brooke Hopkins was a tech lead at Waymo, where she saw parallels between self-driving systems and conversational AI.

- Coval simulates thousands of scenario variations to identify performance gaps in AI agents.

The connection between autonomous vehicles and AI agents might not seem obvious at first glance, but Coval's founder and CEO Brooke Hopkins sees clear parallels. "When I left Waymo, I realized a lot of these problems that we had at Waymo were exactly what the rest of the AI industry was facing," Hopkins told TechCrunch. Drawing on her experience leading Waymo's evaluation infrastructure team, Hopkins recognized that both technologies face the same challenge: ensuring reliable performance across countless possible scenarios.

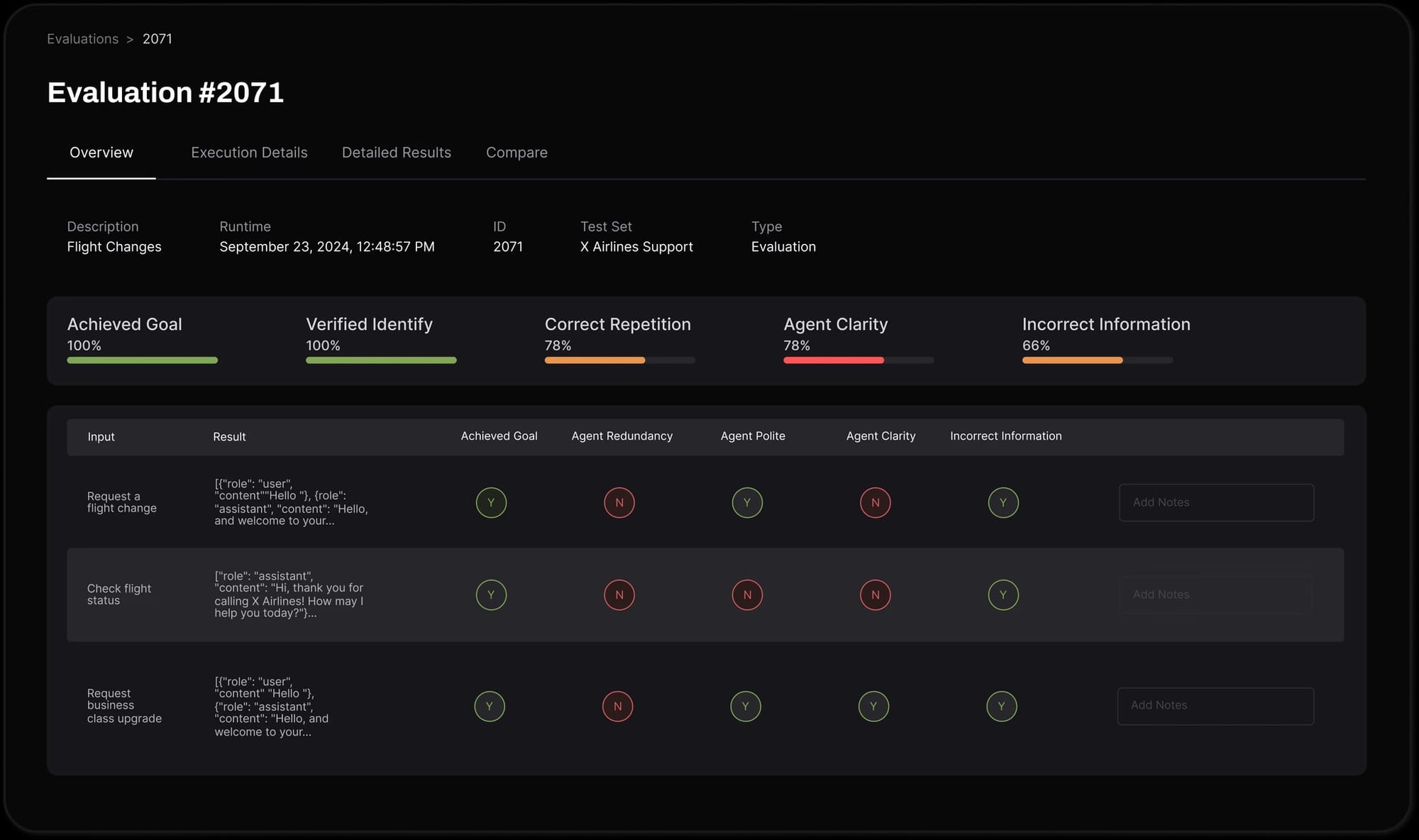

Coval's platform addresses a critical gap in the rapidly evolving AI agent landscape. As companies rush to deploy AI agents for customer service, sales, and other applications, they often lack robust testing infrastructure to ensure reliable performance. The platform can simulate thousands of scenarios simultaneously, from restaurant reservations to customer service interactions, providing comprehensive performance data and customizable evaluation metrics.

Coval's seed round was led by MaC Venture Capital and included support from Y Combinator, General Catalyst, Fortitude Ventures, Pioneer Fund, and Lombard Street Ventures. The funding will primarily support engineering team expansion and work toward product-market fit. The company also plans to extend its evaluation capabilities to web-based agents and other AI applications.

For enterprises considering AI agent deployment, Coval's approach offers a potential solution to a common challenge: proving reliability. "One of the biggest blockers to agents being adopted by enterprises is them feeling confident that this isn't just a demo with smoke and mirrors," Hopkins explained. The platform's comprehensive testing and monitoring capabilities aim to provide that confidence through transparent, data-driven evaluation.

Since its public launch in October 2024, Coval has seen growing demand from companies eager to validate their AI agents' performance. The startup's early traction suggests a strong market need for sophisticated AI testing tools, particularly as organizations seek to deploy AI agents at scale while maintaining consistent performance and reliability.