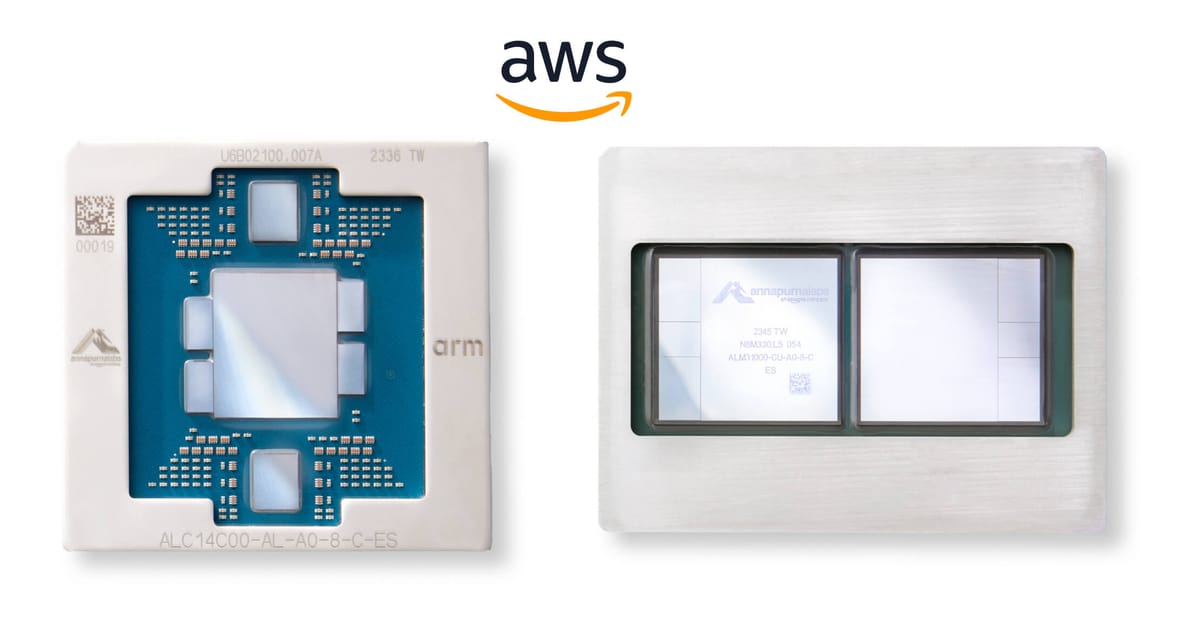

Amazon Web Services announced the latest iterations of its AWS-designed chips, Graviton4 and Trainium2, at its re:Invent conference today. The new chips are designed to deliver better price performance and energy efficiency across a broad range of customer workloads.

Graviton4 is AWS’s fourth-generation general-purpose processor. According to AWS, it delivers up to 30% better compute performance than its predecessor, Graviton3, along with 50% more cores and 75% more memory bandwidth.

These improvements make Graviton4 well suited to compute-intensive workloads such as high-performance databases, analytics, and video encoding. AWS calls it the most powerful and most energy-efficient processor it has built for a broad range of workloads.

Graviton4 will be available in new memory-optimized Amazon EC2 R8g instances, which offer larger instance sizes with up to 3x more vCPUs and 3x more memory than current R7g instances. AWS says this lets customers such as Nielsen, Pinterest, and Snowflake run larger databases, in-memory caches, and data lakes.

More than 50,000 customers already use Graviton, which is available in more than 150 instance types. AWS has iterated quickly, shipping four Graviton generations in five years, with each generation improving price performance and energy efficiency.

As foundation models and large language models grow to hundreds of billions or even trillions of parameters, training them requires specialized, high-performance hardware.

Trainium2 is built for this demanding task and improves substantially on first-generation Trainium: AWS says it delivers up to 4x faster training, 3x more memory capacity per chip, and up to 2x better energy efficiency.

Trainium2 will be available in new Amazon EC2 Trn2 instances, each containing 16 Trainium2 chips. Trn2 instances are designed to scale out in EC2 UltraClusters of up to 100,000 Trainium2 chips, a scale AWS describes as unprecedented.
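For a sense of what that ceiling means in instance terms, a back-of-the-envelope division helps. It assumes, for illustration only, that a maximum-size UltraCluster is built entirely from 16-chip Trn2 instances; the actual cluster topology may differ.

$$
\frac{100{,}000\ \text{Trainium2 chips}}{16\ \text{chips per Trn2 instance}} = 6{,}250\ \text{Trn2 instances}
$$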

AWS says this scale can cut training times for large language models from months to weeks, helping customers move faster on generative AI applications. Anthropic, Databricks, Datadog, and SAP are among the customers AWS named alongside the new chips. Anthropic, the developer of the AI assistant Claude, plans to use Trainium2 to train its models, and Databricks, which offers a unified data and AI platform, uses AWS Trainium to scale the training of its AI models.

While expanding its custom silicon lineup, AWS stressed that it will continue to support third-party chips from AMD, Intel, and NVIDIA. It also announced an expanded collaboration with NVIDIA, including new EC2 instances based on NVIDIA’s H200 GPUs and a cluster of about 16,000 NVIDIA GH200 Grace Hopper Superchips that NVIDIA will use for its own research and development.

By combining in-house chip design with partnerships, AWS preserves its emphasis on customer choice while pushing for better cloud performance, lower costs, and improved sustainability. The rapid progress of Graviton and Trainium shows how far AWS’s chip-design capabilities have come.