GitHub's latest AI-powered tool, code scanning autofix, is now available in public beta for GitHub Advanced Security customers. This new feature helps developers remediate more than two-thirds of supported alerts with little or no editing, dramatically reducing time and effort spent on remediation.

Code scanning autofix is powered by GitHub Copilot and CodeQL, GitHub's code analysis engine for automating security checks. It covers more than 90% of alert types in JavaScript, TypeScript, Java, and Python, and its suggested fixes can often be applied as-is, so developers can address vulnerabilities while they are still writing the code.

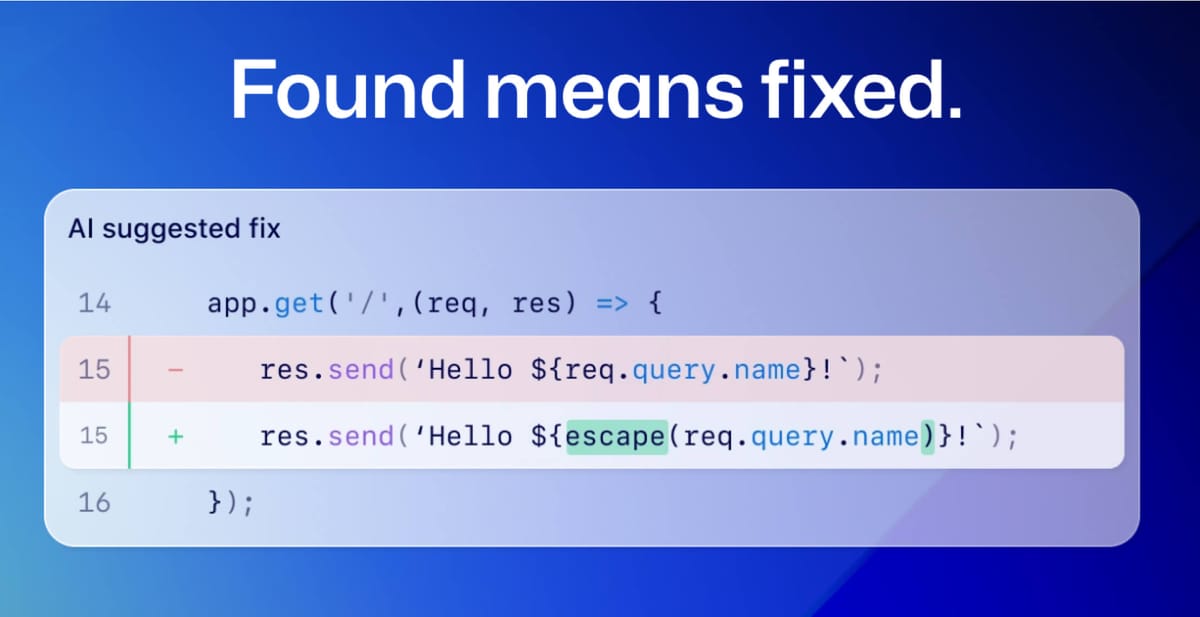

To generate suggestions, the tool combines CodeQL's analysis with a set of heuristics and GitHub Copilot APIs. When CodeQL finds a vulnerability in a supported language, the fix suggestion includes a natural-language explanation of the proposed fix and a preview of the code change, which the developer can accept, edit, or dismiss.
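
As a hypothetical illustration of the kind of change such a suggestion previews (this is not actual autofix output), consider a SQL injection alert in Python, where user input is concatenated into a query string. The sketch below assumes a small in-memory `sqlite3` database, and the function names `find_user_unsafe` and `find_user_fixed` are invented for this example. A typical remediation passes the input as a bound query parameter instead.

```python
import sqlite3


def setup_db() -> sqlite3.Connection:
    # Hypothetical in-memory database with one table, for illustration only.
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE users (name TEXT, email TEXT)")
    conn.executemany(
        "INSERT INTO users VALUES (?, ?)",
        [("alice", "alice@example.com"), ("bob", "bob@example.com")],
    )
    return conn


def find_user_unsafe(conn: sqlite3.Connection, name: str) -> list:
    # Vulnerable: user input is concatenated directly into the SQL text,
    # the pattern a SQL injection alert points at.
    query = "SELECT email FROM users WHERE name = '" + name + "'"
    return conn.execute(query).fetchall()


def find_user_fixed(conn: sqlite3.Connection, name: str) -> list:
    # Remediated: the value is passed as a bound parameter, so the database
    # treats it as data rather than as part of the SQL statement.
    return conn.execute("SELECT email FROM users WHERE name = ?", (name,)).fetchall()


conn = setup_db()
payload = "x' OR '1'='1"
print(len(find_user_unsafe(conn, payload)))  # 2: the injected condition matches every row
print(len(find_user_fixed(conn, payload)))   # 0: no user is literally named x' OR '1'='1
```

The fix changes only how the value reaches the query, so lookups for legitimate names such as `"alice"` behave the same as before. Preserving behavior in this way is exactly what a suggested fix is meant to do.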

GitHub describes its vision for application security as an environment where "found means fixed." According to the company, its focus on the developer experience in GitHub Advanced Security already helps teams remediate vulnerabilities 7x faster than traditional security tools. GitHub presents code scanning autofix as the next step, helping organizations slow the growth of "application security debt."

GitHub plans to continue adding support for more languages, with C# and Go coming next. The company encourages users to join the autofix feedback and resources discussion to share their experiences and help guide further improvements to the autofix experience.

In a previous blog post, the GitHub Engineering team offered a look under the hood of code scanning autofix, describing how it works and the evaluation framework used for testing and iteration. The post explains how the tool uses a large language model (LLM) to suggest code edits that fix the problem without changing the code's functionality. It also outlines the pre- and post-processing heuristics that handle real-world complexity and work around LLM limitations. It is well worth reading if you want the technical details.