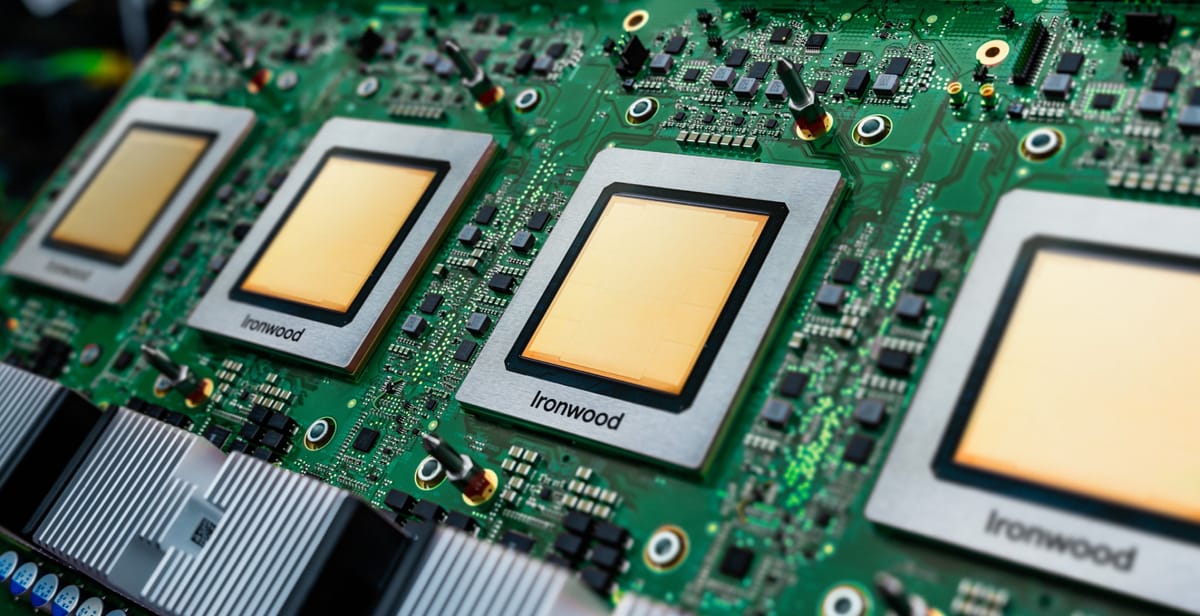

Google has unveiled Ironwood, its seventh-generation Tensor Processing Unit (TPU) and the first built specifically for inference.

Key Points:

- Ironwood is Google’s first TPU designed specifically for inference.

- Delivers 42.5 exaFLOPS of FP8 performance across 9,216 chips.

- Offers 2x the power efficiency of its predecessor, Trillium.

- Each chip packs 192 GB HBM and 4.6 PFLOPS of compute.

Inference has become the dominant workload in AI. It is what happens when a chatbot responds, a model generates an image, or an AI agent takes your prompt and executes a plan. Google says Ironwood is well suited to the computational demands of newer “reasoning” language models such as Gemini 2.5, Claude 3.7 Sonnet and o3.

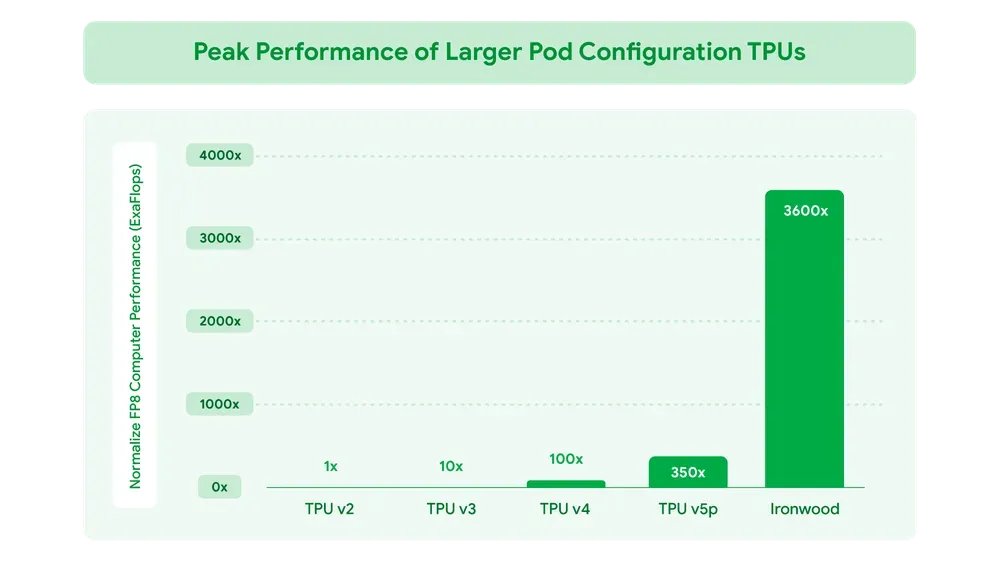

Ironwood comes in two configurations for Google Cloud customers: a 256-chip version for standard inference needs and a massive 9,216-chip configuration that delivers 42.5 exaflops of computing power. Each individual chip boasts peak compute of 4,614 teraflops, putting it in the same performance tier as NVIDIA's Blackwell B200 chips.
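The pod-level figure follows directly from the per-chip number. As a quick sanity check, the short Python sketch below multiplies the quoted peak of 4,614 teraflops per chip by each pod size. The function name `pod_exaflops` is ours, and the calculation assumes ideal linear scaling of peak throughput, which real workloads will not reach.

```python
# Back-of-the-envelope check of the pod figures quoted in the article.
# Assumption: peak throughput scales linearly with chip count.

PEAK_TFLOPS_PER_CHIP = 4_614   # per-chip peak quoted by Google
POD_SIZES = (256, 9_216)       # the two Ironwood configurations


def pod_exaflops(chips: int, tflops_per_chip: float = PEAK_TFLOPS_PER_CHIP) -> float:
    """Aggregate peak compute in exaFLOPS (1 exaFLOPS = 1,000,000 teraFLOPS)."""
    return chips * tflops_per_chip / 1_000_000


for chips in POD_SIZES:
    print(f"{chips:>5} chips: {pod_exaflops(chips):.2f} exaFLOPS peak")
```

Under that assumption, the 9,216-chip pod works out to roughly 42.5 exaflops, matching Google's headline number, while the 256-chip configuration comes to about 1.2 exaflops.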

Ironwood improves on previous generations in several ways. It provides twice the performance per watt of last year's Trillium TPUs and is nearly 30 times more power efficient than Google's first Cloud TPU from 2018. This focus on efficiency addresses one of the enterprise world's most pressing AI concerns: the growing power demands of advanced models.

Memory has been substantially upgraded. Each chip carries 192GB of high-bandwidth memory (HBM), six times that of Trillium, with bandwidth of 7.2 to 7.4 terabytes per second. The larger capacity lets a chip work on bigger models and datasets without frequent data transfers, which is critical for enterprises deploying sophisticated generative AI applications.
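To put those memory figures in perspective, here is a rough Python sketch built only on the numbers quoted above. It derives the Trillium capacity implied by the "six times" claim, the total HBM in a full pod, and how long one chip would need to read its entire HBM once at the quoted bandwidth. The helper names are ours, decimal units (1 TB = 1,000 GB) are assumed, and the sweep time is an idealized estimate rather than a figure Google has published.

```python
# Rough memory arithmetic from the quoted Ironwood figures.
# Assumptions: decimal units, and bandwidth sustained at the quoted peak.

HBM_GB_PER_CHIP = 192
HBM_BANDWIDTH_TBPS = (7.2, 7.4)   # range quoted in the article
TRILLIUM_RATIO = 6                # Ironwood has "six times" Trillium's HBM
FULL_POD_CHIPS = 9_216


def implied_trillium_hbm_gb() -> float:
    """Trillium per-chip HBM implied by the six-times claim."""
    return HBM_GB_PER_CHIP / TRILLIUM_RATIO


def pod_hbm_pb(chips: int) -> float:
    """Total HBM across a pod in petabytes (1 PB = 1,000,000 GB)."""
    return chips * HBM_GB_PER_CHIP / 1_000_000


def full_hbm_sweep_ms(bandwidth_tbps: float) -> float:
    """Idealized time in milliseconds to read one chip's entire HBM once."""
    return HBM_GB_PER_CHIP / (bandwidth_tbps * 1_000) * 1_000


print(f"Implied Trillium HBM: {implied_trillium_hbm_gb():.0f} GB per chip")
print(f"Full-pod HBM: {pod_hbm_pb(FULL_POD_CHIPS):.2f} PB")
for bw in HBM_BANDWIDTH_TBPS:
    print(f"Full HBM sweep at {bw} TB/s: {full_hbm_sweep_ms(bw):.1f} ms")
```

On these assumptions, Trillium would have 32 GB per chip, a full pod holds about 1.77 PB of HBM, and a single pass over one chip's memory takes on the order of 26 to 27 milliseconds.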

Google has raised the chip's Inter-Chip Interconnect (ICI) to 1.2 terabits per second of bidirectional bandwidth, enabling faster communication between chips for distributed processing at scale. Ironwood also features an improved SparseCore, a specialized accelerator for processing the ultra-large embeddings used in ranking and recommendation systems.

It’s also tightly integrated into Google’s AI Hypercomputer architecture — a modular cluster design that blends custom chips, distributed systems software, and networking hardware. With DeepMind’s Pathways runtime, developers can scale workloads across tens of thousands of TPUs, using Ironwood not just as a chip but as part of a composable, enterprise-grade compute fabric.

Ironwood isn’t for sale — like previous TPUs, it will be offered exclusively through Google Cloud later this year. The company hasn't disclosed pricing, manufacturing details, or how much Ironwood capacity will be reserved for internal use versus cloud customers.

Ironwood brings a lot to the table—increased computation power, memory capacity, ICI networking advancements and reliability. The question remains whether Google can use its TPU advantage to claw back ground from NVIDIA, whose GPUs remain the industry standard.