Hume AI, a pioneer in the field of emotional AI, has announced a $50 million Series B funding round led by EQT Ventures. The company also unveiled its new flagship product, the Empathic Voice Interface (EVI), which it describes as a first-of-its-kind conversational AI with emotional intelligence.

Union Square Ventures, Nat Friedman & Daniel Gross, Metaplanet, Northwell Holdings, Comcast Ventures, and LG Technology Ventures also participated in the round. Hume will use the capital to scale its team, accelerate its AI research, and continue developing EVI.

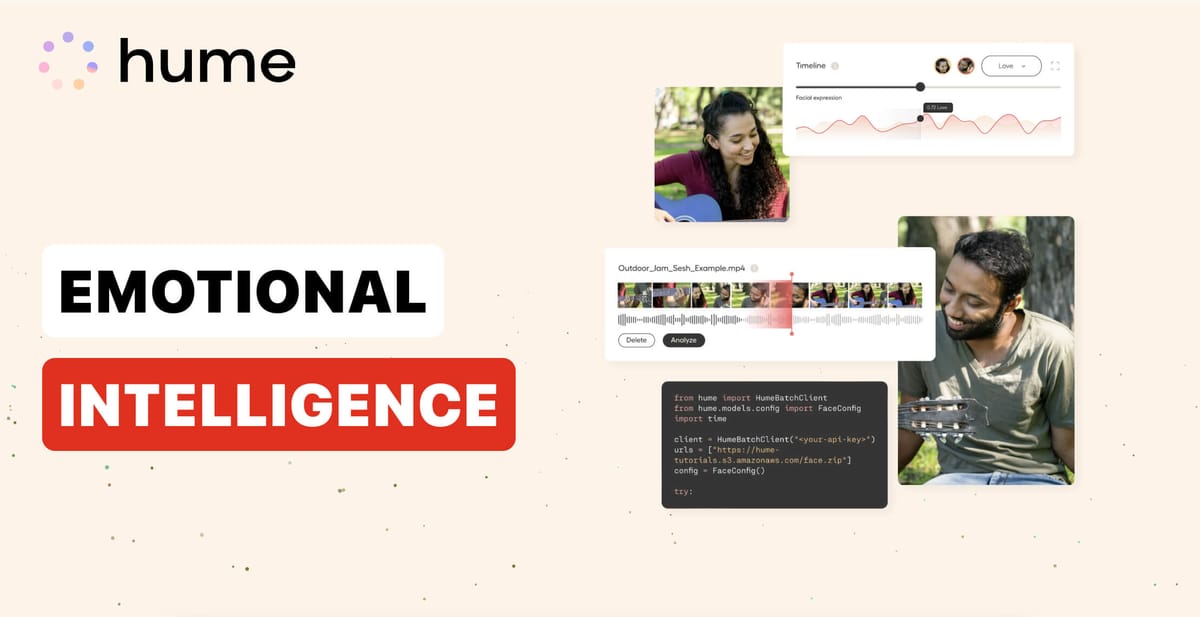

EVI is a universal voice interface: a single API for transcription, frontier large language models (LLMs), and text-to-speech. It is built on what Hume calls an empathic large language model (eLLM), a new form of multimodal generative AI that integrates LLMs with expression measures. The eLLM lets EVI adjust its language and tone of voice based on context and on the user's emotional expressions. Developers will be able to integrate EVI into applications with just a few lines of code, and public availability is slated for April.
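The sketch below makes that data flow concrete. It covers the three stages EVI bundles behind one API: transcription with expression measurement, a reply conditioned on those measurements, and speech synthesis in a matching tone. This is a minimal, hypothetical sketch. The `Utterance` type, every function name, and the expression labels are illustrative placeholders and do not come from Hume's SDK. The stubs only show how expression measures could flow from the input audio into both the wording and the tone of the response.

```python
from dataclasses import dataclass

# Hypothetical stand-ins for the three stages EVI bundles behind a single API.
# None of these names come from Hume's SDK; they only illustrate the data flow.


@dataclass
class Utterance:
    text: str
    expressions: dict[str, float]  # illustrative labels, e.g. {"frustration": 0.7}


def transcribe(audio: bytes) -> Utterance:
    # Placeholder: a real system would run speech recognition plus an
    # expression-measurement model over the audio. Here the "audio" is text
    # and the expression scores are fixed.
    return Utterance(text=audio.decode("utf-8"),
                     expressions={"frustration": 0.7, "joy": 0.1})


def generate_reply(utt: Utterance) -> tuple[str, str]:
    # Placeholder "empathic" step: pick the reply tone from the strongest
    # measured expression. An eLLM would do this conditioning inside the model.
    dominant = max(utt.expressions, key=utt.expressions.get)
    tone = "calm" if dominant == "frustration" else "upbeat"
    return f"I hear you. Let's sort out: {utt.text}", tone


def synthesize(text: str, tone: str) -> bytes:
    # Placeholder TTS: tag the text with the requested tone instead of producing audio.
    return f"[{tone}] {text}".encode("utf-8")


def voice_turn(audio: bytes) -> bytes:
    # One conversational turn: audio in, expressive "speech" out.
    utt = transcribe(audio)
    reply, tone = generate_reply(utt)
    return synthesize(reply, tone)


print(voice_turn(b"my order never arrived").decode())
```

According to Hume, EVI performs this conditioning inside the eLLM rather than through a hand-written rule like `generate_reply` above. The sketch only shows where expression measures enter the loop.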

Hume's approach to emotional AI is grounded in semantic space theory (SST), a data-driven framework for understanding emotion. Built on extensive data collection and advanced statistical modeling, SST maps the full spectrum of human emotion, revealing its high-dimensional nature and the continuity between emotional states. This theoretical foundation informs both the training of Hume's models and the development of its products.

EVI's features include end-of-turn detection that draws on the user's tone of voice. Hume says this detection is state-of-the-art and eliminates awkward overlaps. EVI is also interruptible: like a human, it stops speaking and starts listening when the user cuts in. It responds to expression, picking up the natural ups and downs in pitch and tone that convey meaning beyond words. It also generates the right tone of voice for its own replies, responding with natural, expressive speech.
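Interruptibility and end-of-turn detection together amount to a turn-taking policy. The sketch below models that policy as a small state machine. It assumes a hypothetical `end_of_turn_prob` score produced by some prosody-aware model. The `TurnTaker` class, its states, and the 0.8 threshold are illustrative choices, not a description of how EVI is implemented.

```python
from enum import Enum, auto


class State(Enum):
    LISTENING = auto()
    THINKING = auto()
    SPEAKING = auto()


class TurnTaker:
    """Hypothetical turn-taking controller; the threshold and events are illustrative."""

    def __init__(self, end_of_turn_threshold: float = 0.8) -> None:
        self.state = State.LISTENING
        self.threshold = end_of_turn_threshold

    def on_user_audio(self, end_of_turn_prob: float) -> State:
        # end_of_turn_prob stands in for a score from a model that weighs
        # prosody (pitch, tone) as well as words; here it is just a number.
        if self.state is not State.LISTENING:
            # Barge-in: user speech while the assistant is thinking or speaking
            # cancels the pending reply and hands the floor back to the user.
            self.state = State.LISTENING
        elif end_of_turn_prob >= self.threshold:
            # The user has likely finished their turn: prepare a reply.
            self.state = State.THINKING
        return self.state

    def on_reply_ready(self) -> State:
        # Only start speaking if the reply was not cancelled by a barge-in.
        if self.state is State.THINKING:
            self.state = State.SPEAKING
        return self.state

    def on_reply_finished(self) -> State:
        # Finished speaking: go back to listening.
        if self.state is State.SPEAKING:
            self.state = State.LISTENING
        return self.state


turns = TurnTaker()
turns.on_user_audio(0.3)  # mid-sentence pause: keep listening
turns.on_user_audio(0.9)  # turn likely over: start preparing a reply
turns.on_reply_ready()    # begin speaking
turns.on_user_audio(0.1)  # user cuts in: stop speaking and listen
assert turns.state is State.LISTENING
```

Treating any user speech during a reply as a barge-in is the simplest policy. A production system could also filter out brief acknowledgments such as "mm-hmm" so that the assistant does not stop for every backchannel.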

EVI is also designed to improve itself by learning from users' reactions, optimizing for their happiness and satisfaction. Hume says this alignment with users within each application is what makes EVI a human-like conversationalist.

Potential applications for Hume's technology span a range of industries, including healthcare, customer service, and productivity tools. For example, the Icahn School of Medicine at Mount Sinai is using Hume's expression AI models to track mental health conditions in patients undergoing experimental deep brain stimulation treatments. The productivity chatbot Dot, meanwhile, uses Hume's AI to provide context-aware emotional support to users.

The announcement follows a period of rapid growth for Hume. Over the past year, the company launched two key products. The first is the Expression Measurement API, an advanced toolkit for measuring human emotional expression. The second is Custom Models, which applies transfer learning to those measurements to predict human preferences. Hume also expanded its foundational databases to include naturalistic data from over a million diverse participants. It doubled its headcount from 15 to 30 employees and published more than eight academic articles in top journals.

Hume CEO and Chief Scientist Alan Cowen sees empathic AI as crucial to aligning artificial intelligence with human well-being: "By building AI that learns directly from proxies of human happiness, we're effectively teaching it to reconstruct human preferences from first principles and then update that knowledge with every new person it talks to and every new application it's embedded in."

Hume's technology is intriguing. As the company continues to push the boundaries of emotional intelligence in machines, it may redefine how we interact with technology and pave the way for more intuitive, empathetic, and human-centric AI experiences. Hume offers a demo for readers who want to try it themselves.