OpenAI has introduced GPT-4o mini, a smaller, more affordable variant of its flagship AI model, GPT-4o. The goal is to balance capability with cost-efficiency while expanding access to the company's AI tools.

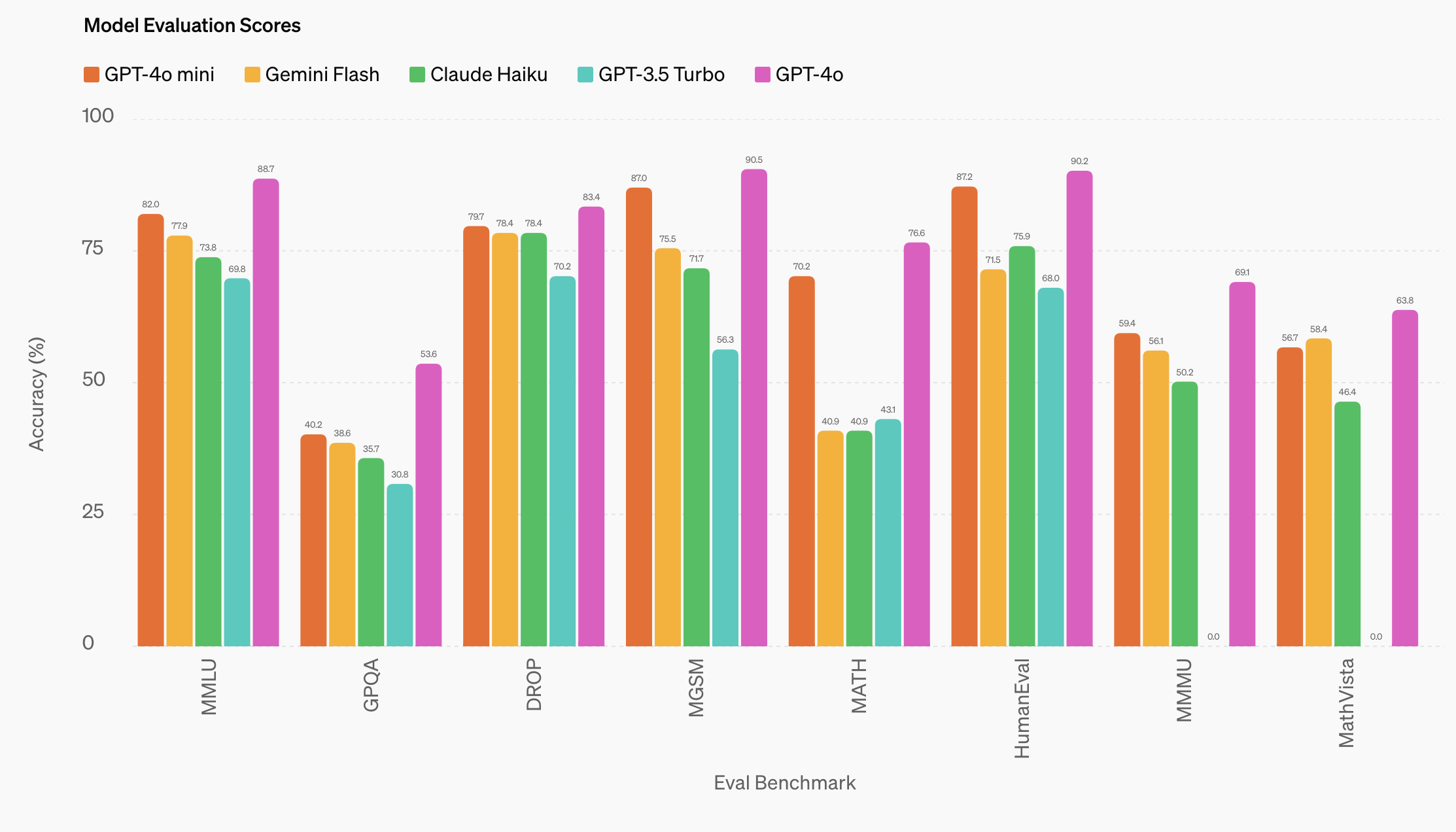

The new model scored 82% on the Massive Multitask Language Understanding (MMLU) benchmark, outperforming its predecessor GPT-3.5 Turbo (70%) and competing models like Claude 3 Haiku (75.2%) and Gemini 1.5 Flash (78.9%). However, it falls short of GPT-4o's 88.7% score.

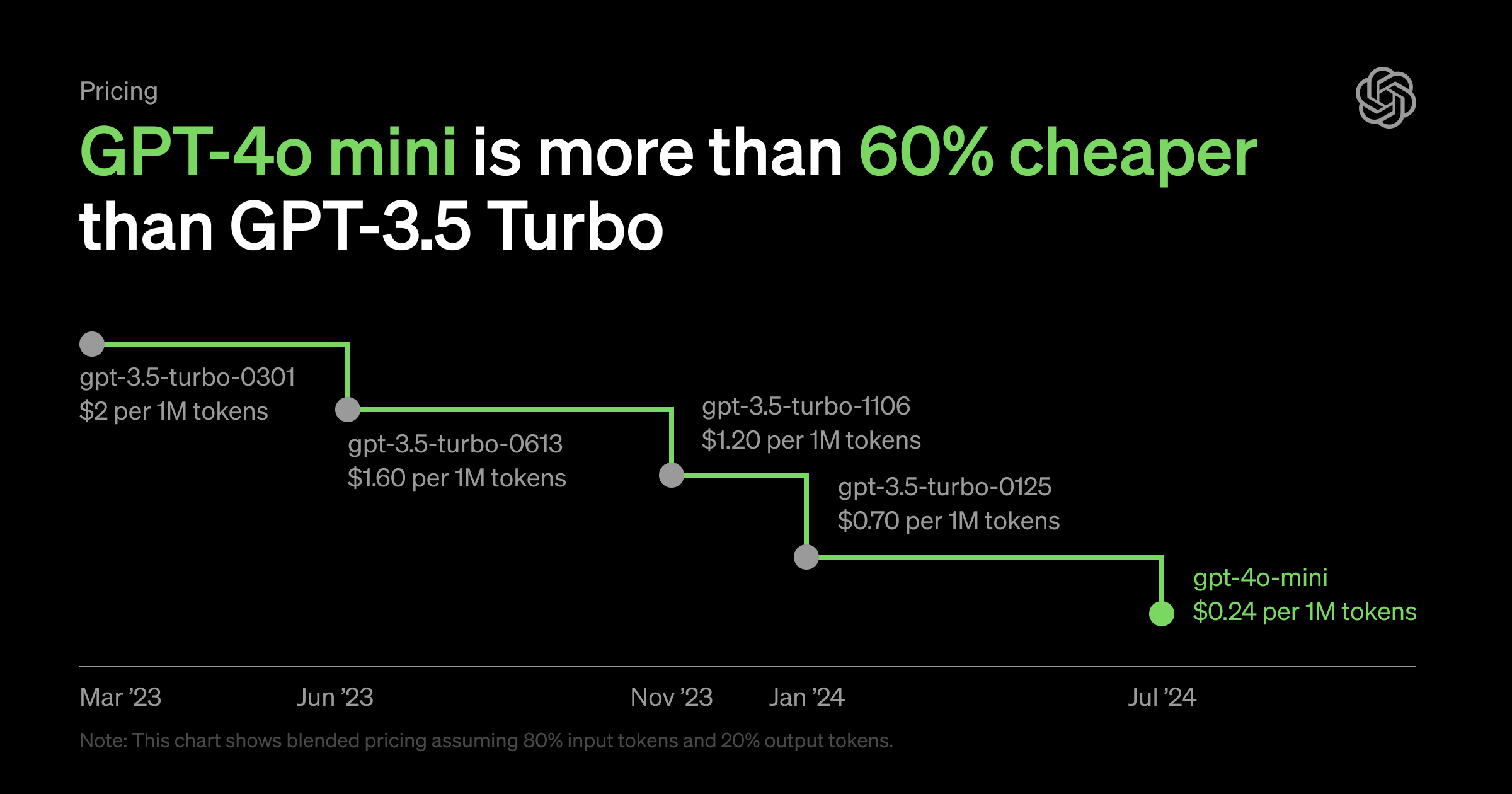

GPT-4o mini has a 128K context window and is priced at $0.15 per million input tokens and $0.60 per million output tokens. For comparison, Claude 3 Haiku offers a 200K context window and is priced at $0.25 per million input tokens and $1.25 per million output tokens.
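To make the price gap concrete, here is a minimal sketch that estimates the bill for a sample workload using the per-million-token prices quoted above. The workload size (10 million input tokens, 2 million output tokens) is a hypothetical chosen for illustration, and the dictionary keys are plain labels rather than guaranteed API model identifiers.

```python
# Prices in USD per million tokens, as quoted in the article.
# The keys are illustrative labels, not guaranteed API model names.
PRICES = {
    "gpt-4o-mini": {"input": 0.15, "output": 0.60},
    "claude-3-haiku": {"input": 0.25, "output": 1.25},
}


def estimate_cost(model: str, input_tokens: int, output_tokens: int) -> float:
    """Return the estimated USD cost of a workload for the given model."""
    price = PRICES[model]
    total = input_tokens * price["input"] + output_tokens * price["output"]
    return total / 1_000_000  # prices are per million tokens


# Hypothetical workload: 10M input tokens and 2M output tokens.
for model in PRICES:
    cost = estimate_cost(model, 10_000_000, 2_000_000)
    print(f"{model}: ${cost:.2f}")
```

Under these assumptions the workload costs about $2.70 on GPT-4o mini and $5.00 on Claude 3 Haiku. The gap widens as the share of output tokens grows, because the output price differs more than the input price.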

While GPT-4o mini is technically a fully multimodal model, it currently handles only text and image inputs and outputs. OpenAI plans to add support for video and audio in the future.

Starting today, GPT-4o mini will be available to free ChatGPT users as well as ChatGPT Plus and Team subscribers. Enterprise users will get access next week. The new model replaces GPT-3.5 Turbo in the ChatGPT interface, though developers can still access GPT-3.5 Turbo through the API for now.

Some companies have already put GPT-4o mini to use. Email startup Superhuman is using it to generate automated replies, while financial services startup Ramp is extracting information from receipts with the model.

OpenAI introduced a new safety approach called "instruction hierarchy" with GPT-4o mini. The technique trains the model to prioritize higher-privilege instructions, such as a developer's system message, over conflicting instructions from users or third-party content, potentially reducing misuse and improving control over the AI's behavior.

The release of GPT-4o mini reflects OpenAI's strategy to maintain its market position while encouraging broader adoption of AI technology. By offering a smaller, cheaper model, OpenAI is aiming to attract developers who might otherwise turn to alternatives like Google’s Gemini 1.5 Flash or Anthropic’s Claude 3 Haiku.