OpenAI has added native image generation to GPT-4o. This is different from the image generation features that previously used a separate model called DALL·E. Now, users can generate and refine images inside the same conversation, using the same model, without context switching. The capability is available starting today in ChatGPT (Free, Plus, Pro, and Team) and Sora, with rollout to Enterprise, Education, and API users expected in the coming weeks.

Key Points:

- GPT-4o now supports image generation, built directly into ChatGPT and Sora.

- It handles photorealism, detailed text rendering, and multi-step instructions.

- Images can be refined through chat, maintaining consistency across iterations.

- Available today to all ChatGPT users; API and enterprise access coming soon.

That means the same AI you’re chatting with can also create visuals, taking your prompt and generating a consistent image that understands your context, preferences, and previous messages.

That iterative loop—refining images in the flow of conversation—is what makes this upgrade exciting. You don’t need to start over or adjust slider settings in a separate UI. You just keep chatting. And 4o remembers, building upon past instructions like a collaborative designer.

OpenAI says the model was trained on a joint distribution of images and text, which helps it understand not just what images look like, but how they relate to language—and each other. That training shows. 4o’s image generator can handle dense prompts with up to 20 distinct objects and relationships, a step up from previous models which start to fall apart after 5 or 6.

Safety remains a key concern. All generated images are embedded with C2PA metadata to indicate provenance. OpenAI says it is also using internal search tools and moderation systems to block harmful content and enforce strict restrictions around real people, nudity, and graphic imagery. The model has been evaluated for alignment using an internal reasoning model that references human-written safety specs.

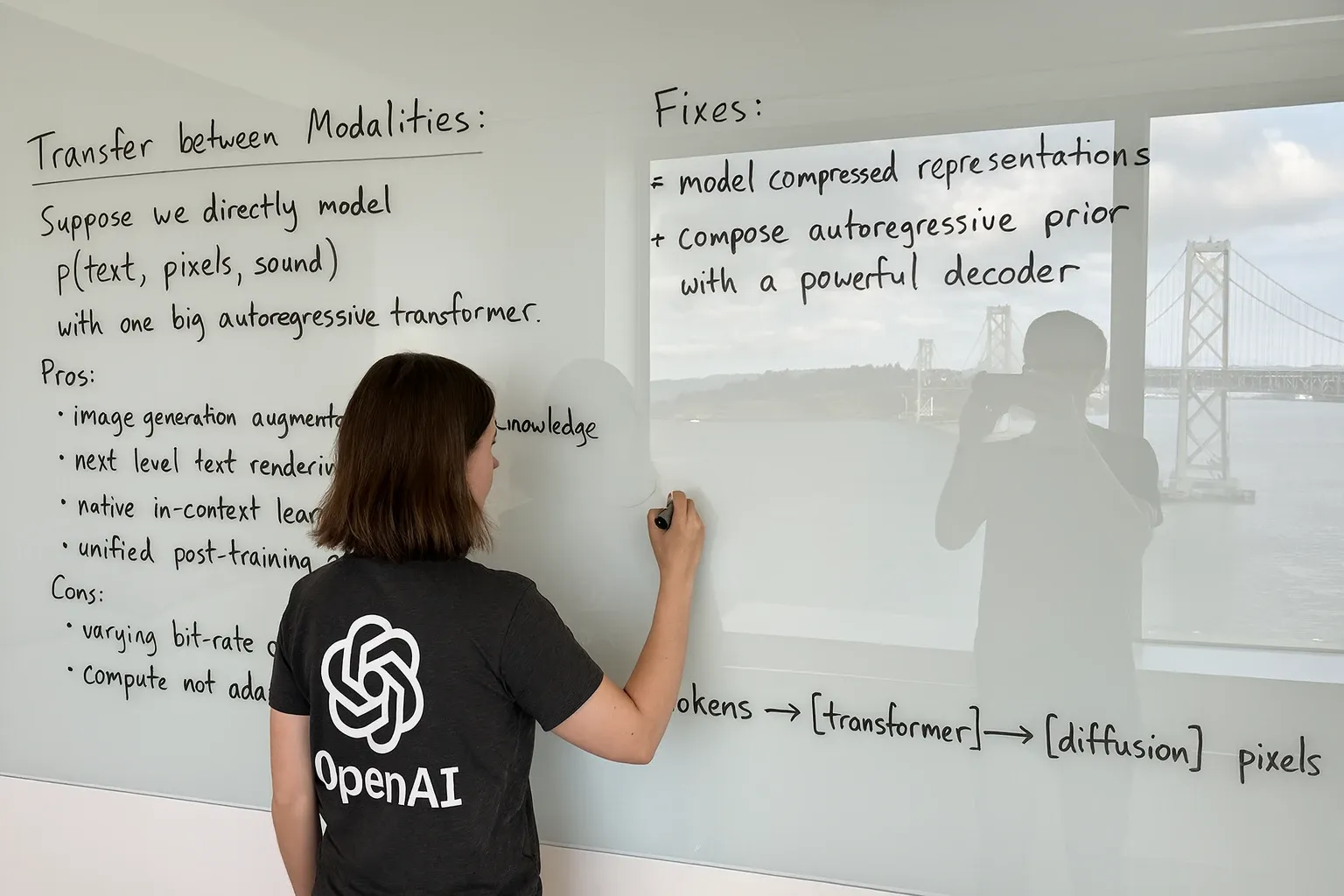

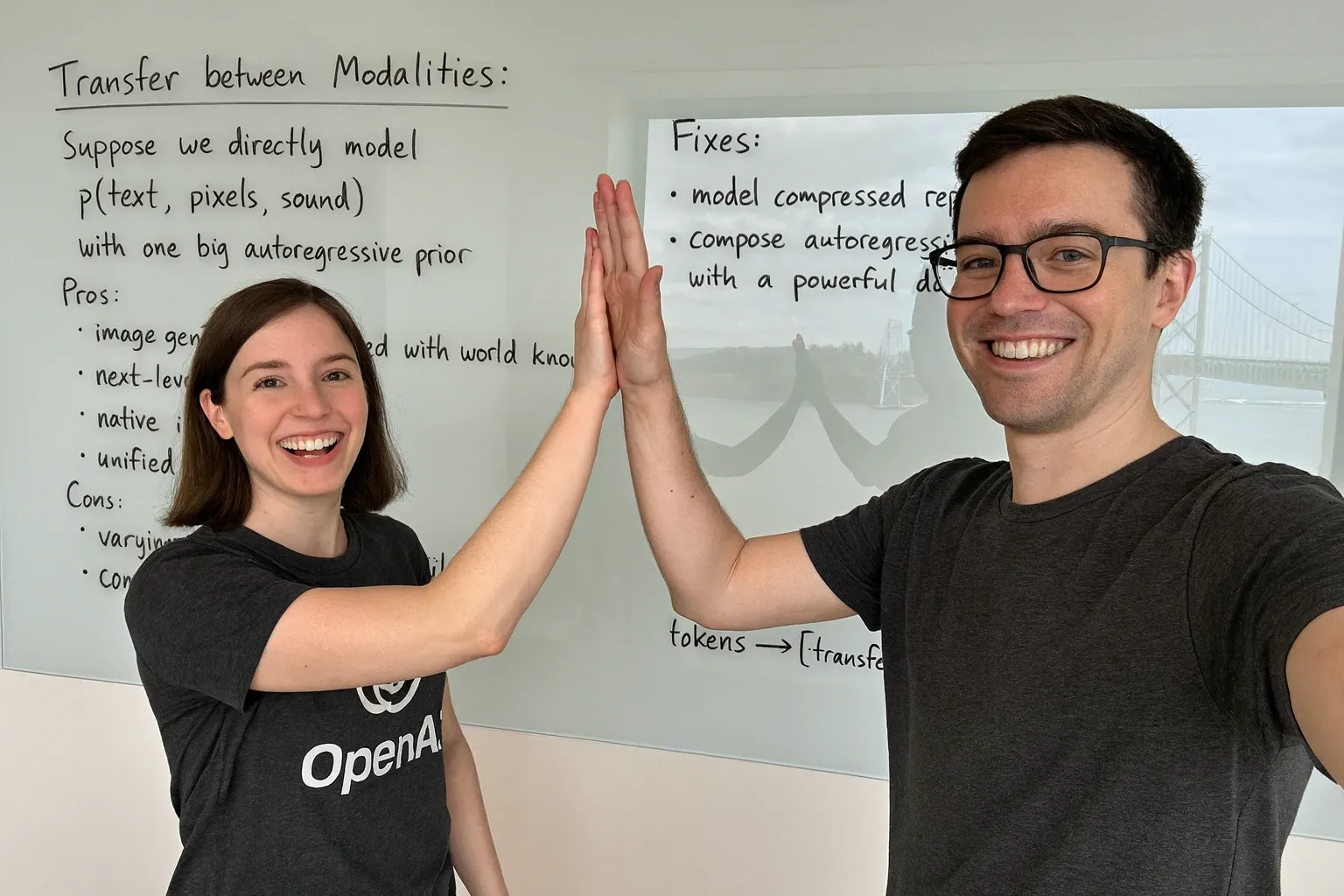

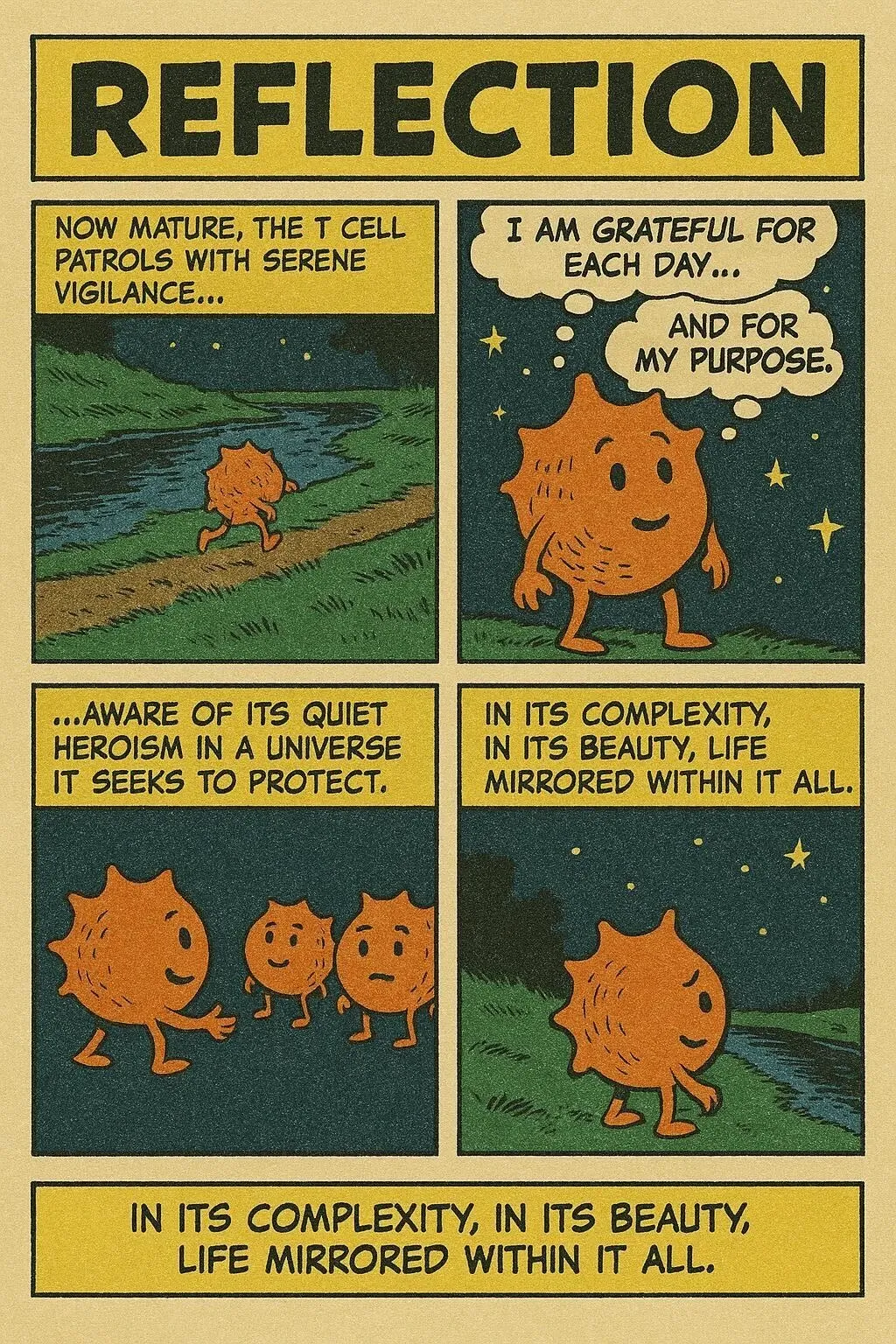

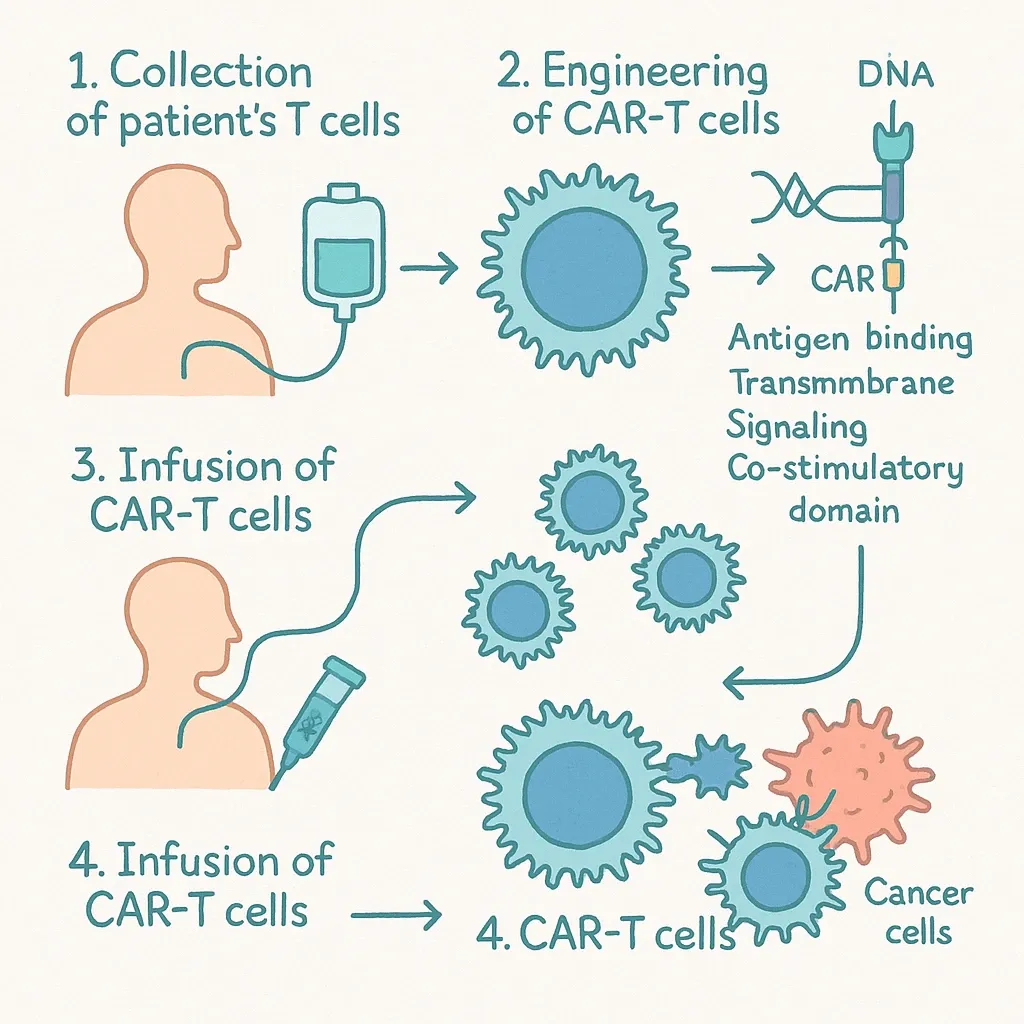

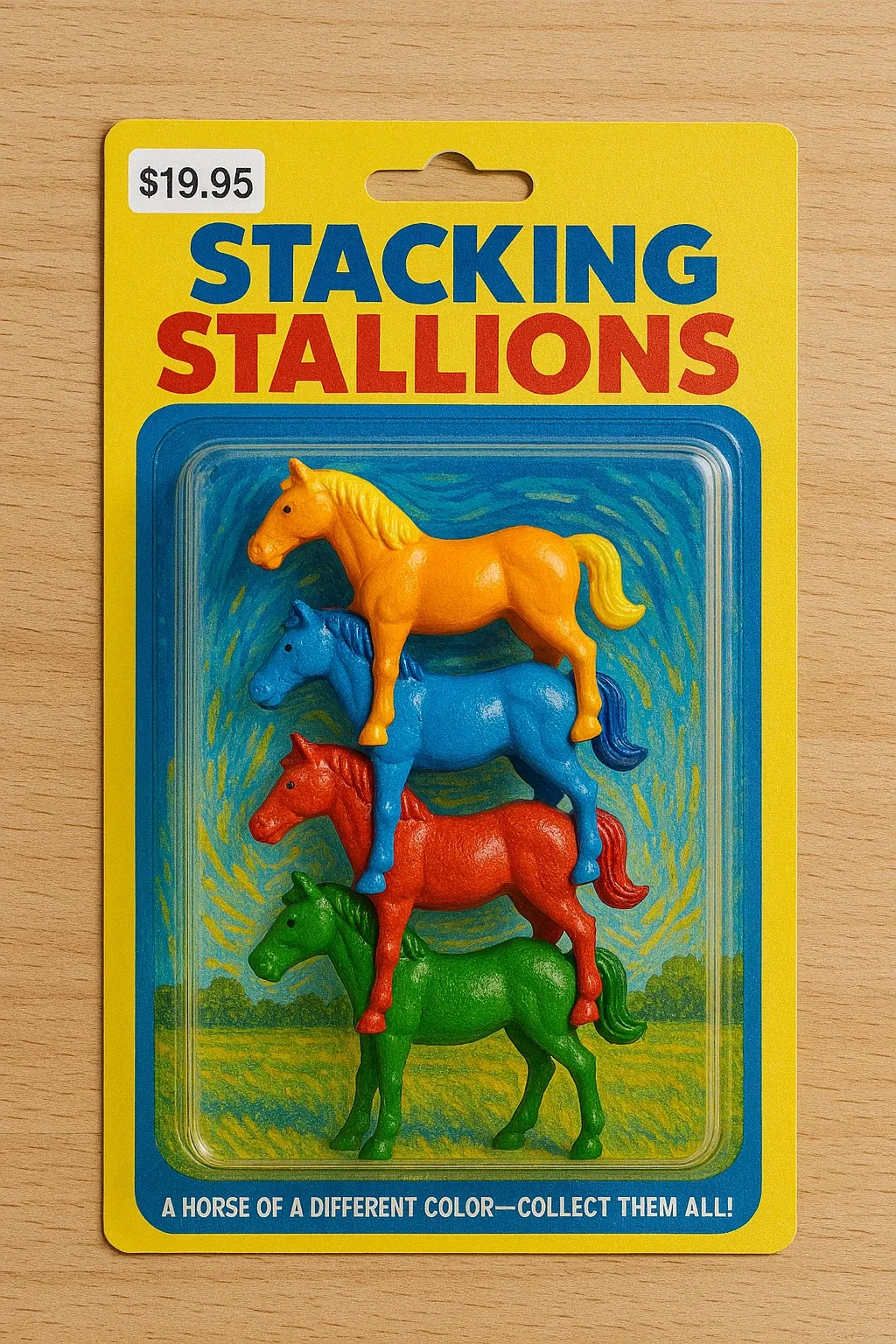

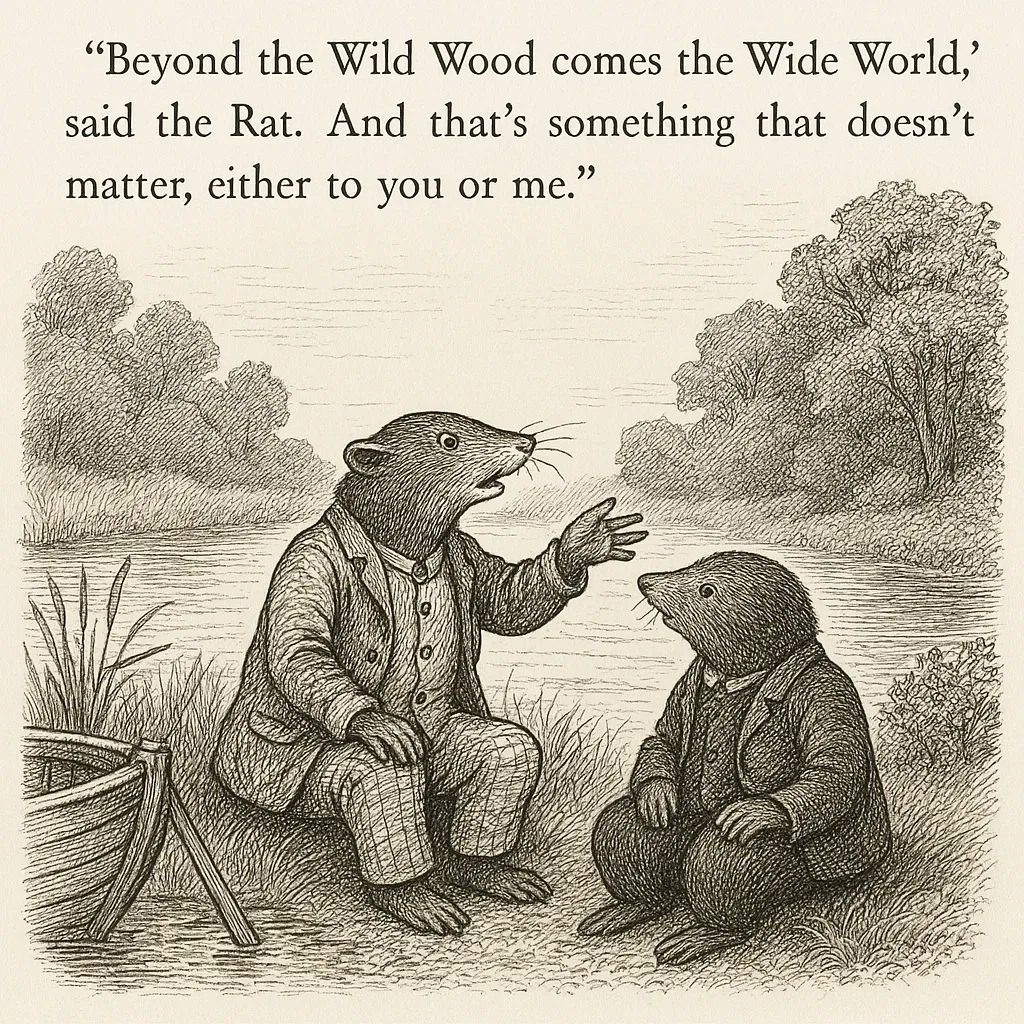

The company emphasizes that 4o’s image generator is more than a creative tool—it’s built for practical use. It can generate diagrams, restaurant menus, whiteboard illustrations, comic panels, and even design assets with a transparent background. GPT-4o leverages its understanding of language and visual relationships to produce output that aligns closely with user intent. The image generation model was trained on paired image-text data, with post-training techniques that boost its accuracy and consistency. Just take a look at the examples below:

Because of its higher fidelity, image generation takes longer to render—up to a minute in some cases. But the payoff is greater control, context awareness, and image quality. For developers, GPT-4o image generation will be accessible through the API in the coming weeks, and DALL·E remains available for users who prefer the older interface via a dedicated GPT.

GPT-4o’s image generation features likely won’t match tools like Midjourney or Photoshop any time soon. But it doesn’t need to. It brings image generation to where people already are—inside a conversation—and makes it feel natural, fluid, and useful.