When it comes to everyday communication, the devil is in the details. A slight frown, a gentle nod, the inflection of a voice, a quiet sigh: these often carry as much weight as the words we say. They are also the nuances that today's powerful large language models (LLMs) often miss. Recognizing this limitation, Microsoft's Project Rumi aims to incorporate paralinguistic input, such as intonation, gestures and facial expressions, into prompt-based interactions with LLMs.

LLMs are no strangers to our modern digital landscape. They provide value across diverse domains and have turned natural language into a vital productivity tool. However, they have their shortfalls. Their effectiveness hinges on the quality and specificity of the user's prompt, a purely lexical input that lacks the richness of human-to-human interaction. Because LLMs ignore paralinguistic cues, they run a higher risk of miscommunication, misunderstanding and inappropriate responses.

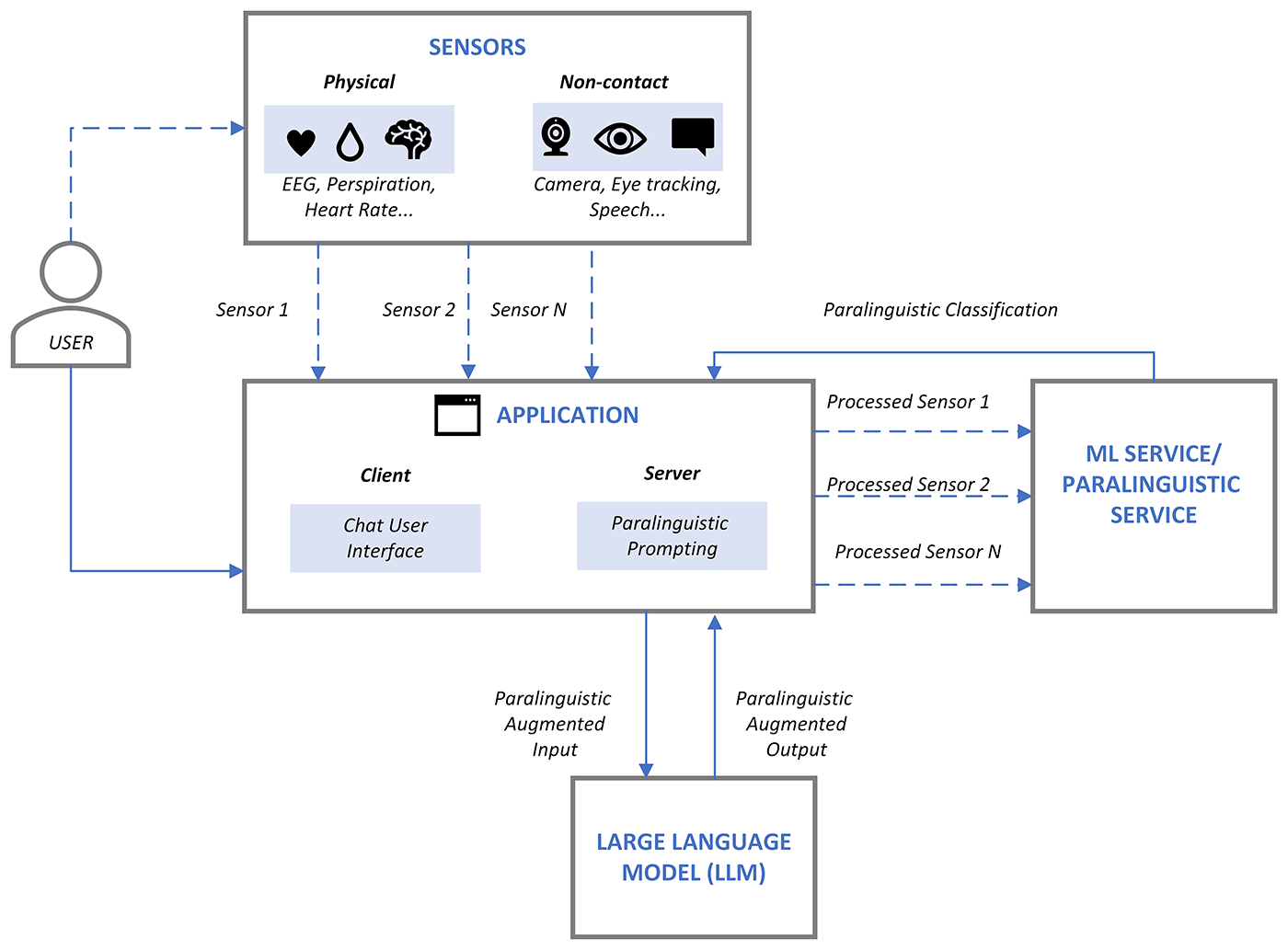

Project Rumi seeks to close this gap with a multimodal system that uses separately trained vision and audio models to assess sentiment from cognitive and physiological data. The system extracts non-verbal cues from video and voice input in real time and turns them into paralinguistic tokens that augment the standard lexical input to existing LLMs such as GPT-4. The result? A more nuanced understanding of the user's sentiment and intent than text-based models can achieve on their own.

For instance, consider a professional conversation in which a manager wants to discuss team workload with an employee. The typed text might be neutral, but the manager's tone and facial expressions could convey concern or empathy. Project Rumi's system can analyze these paralinguistic cues and add them to the prompt, enabling a more effective interaction with the LLM.
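To make the idea of "paralinguistic tokens" more concrete, here is a minimal sketch of how cues from separate vision and audio models might be folded into a text prompt. This is not Project Rumi's implementation, which has not been published in this form: the `ParalinguisticCue` class, the `[modality:label]` token format, the `build_augmented_prompt` function and its `min_confidence` threshold are all hypothetical, chosen only for illustration.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class ParalinguisticCue:
    """Hypothetical output of a separately trained vision or audio model."""
    modality: str      # e.g. "voice" or "face"
    label: str         # e.g. "concerned", "empathetic"
    confidence: float  # model score in [0, 1]


def to_token(cue: ParalinguisticCue) -> str:
    # Render one cue as a bracketed text token (a made-up format).
    return f"[{cue.modality}:{cue.label}]"


def build_augmented_prompt(
    text: str, cues: List[ParalinguisticCue], min_confidence: float = 0.6
) -> str:
    # Keep only the cues the upstream models are reasonably confident about.
    kept = [c for c in cues if c.confidence >= min_confidence]
    if not kept:
        return text  # fall back to the plain lexical prompt
    tokens = " ".join(to_token(c) for c in kept)
    # Prepend the non-verbal context so the LLM sees it alongside the words.
    return f"Non-verbal context: {tokens}\nUser: {text}"


if __name__ == "__main__":
    # The manager example: neutral words, concerned tone, empathetic expression.
    cues = [
        ParalinguisticCue("voice", "concerned", 0.82),
        ParalinguisticCue("face", "empathetic", 0.74),
        ParalinguisticCue("face", "surprised", 0.31),  # dropped: low confidence
    ]
    print(build_augmented_prompt(
        "Can we talk about the team's workload this sprint?", cues))
```

Running the script prints the neutral sentence preceded by `Non-verbal context: [voice:concerned] [face:empathetic]`. The design choice shown here is simply to serialize the cues as extra text, which lets an unmodified text-only LLM consume them. A real system might weight, summarize or encode the cues quite differently.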

At its core, Project Rumi points to an inspiring vision of the future: one where AI assistants perceive and connect with people not just through our words, but through the multifaceted tapestry of cues we use to communicate, empathize and build shared understanding. As we enter the "AI as a copilot" era, extending LLMs' ability to perceive the unspoken dimensions of language is critical if technology is ever to understand us in all our complexity.

Microsoft's future plans for Project Rumi include improving the performance of the existing models and incorporating additional signals, such as heart rate variability (HRV) derived from standard video, as well as cognitive and ambient sensing. These explorations point toward a more dynamic, sensitive AI that can understand the complexity of human interaction.

Although Project Rumi is still in the early stages of research, its implications are profound. Its multimodal approach could help LLMs grasp the nuances of human interaction and pave the way for more natural, satisfying communication between humans and AI.