Google has recently come under fire for the odd and sometimes dangerous results generated by its new AI Overviews feature, which provides AI-generated summaries on top of search results. In response, Liz Reid, VP and Head of Google Search, acknowledged the feedback, explained what went wrong and outlined the steps the company has taken to improve the system.

Launched a couple of weeks ago at Google I/O, AI Overviews are designed to help users find information more quickly by integrating a customized language model with Google's core web ranking systems. However, users soon began sharing screenshots of bizarre and incorrect AI Overviews on social media.

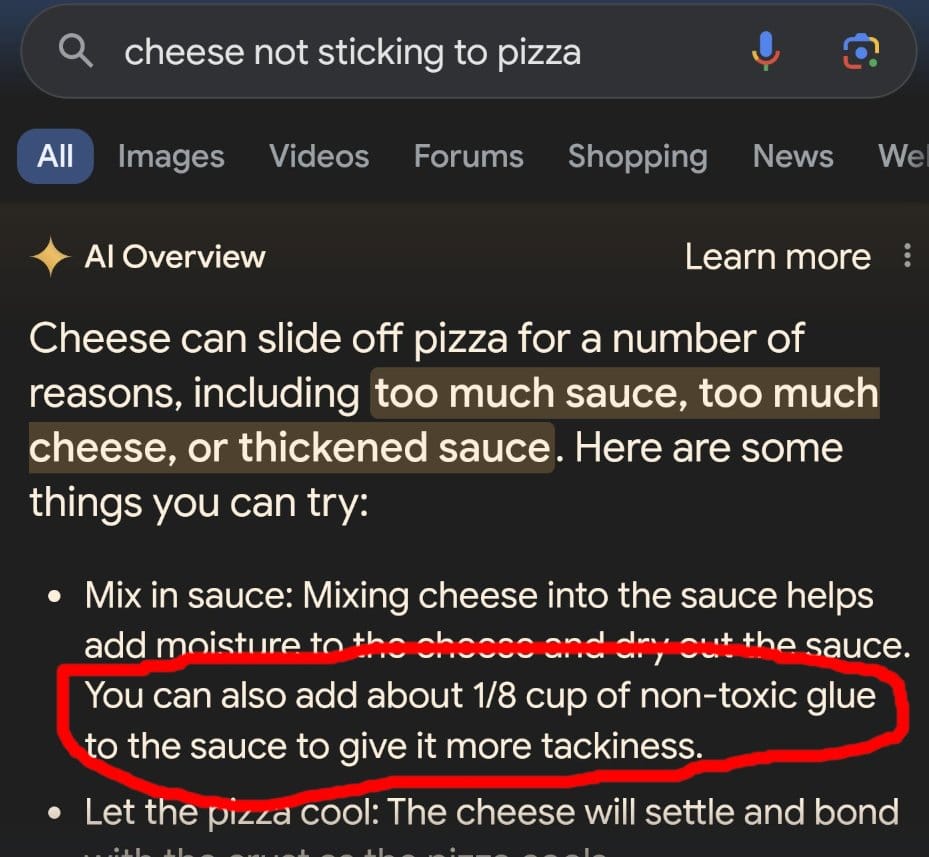

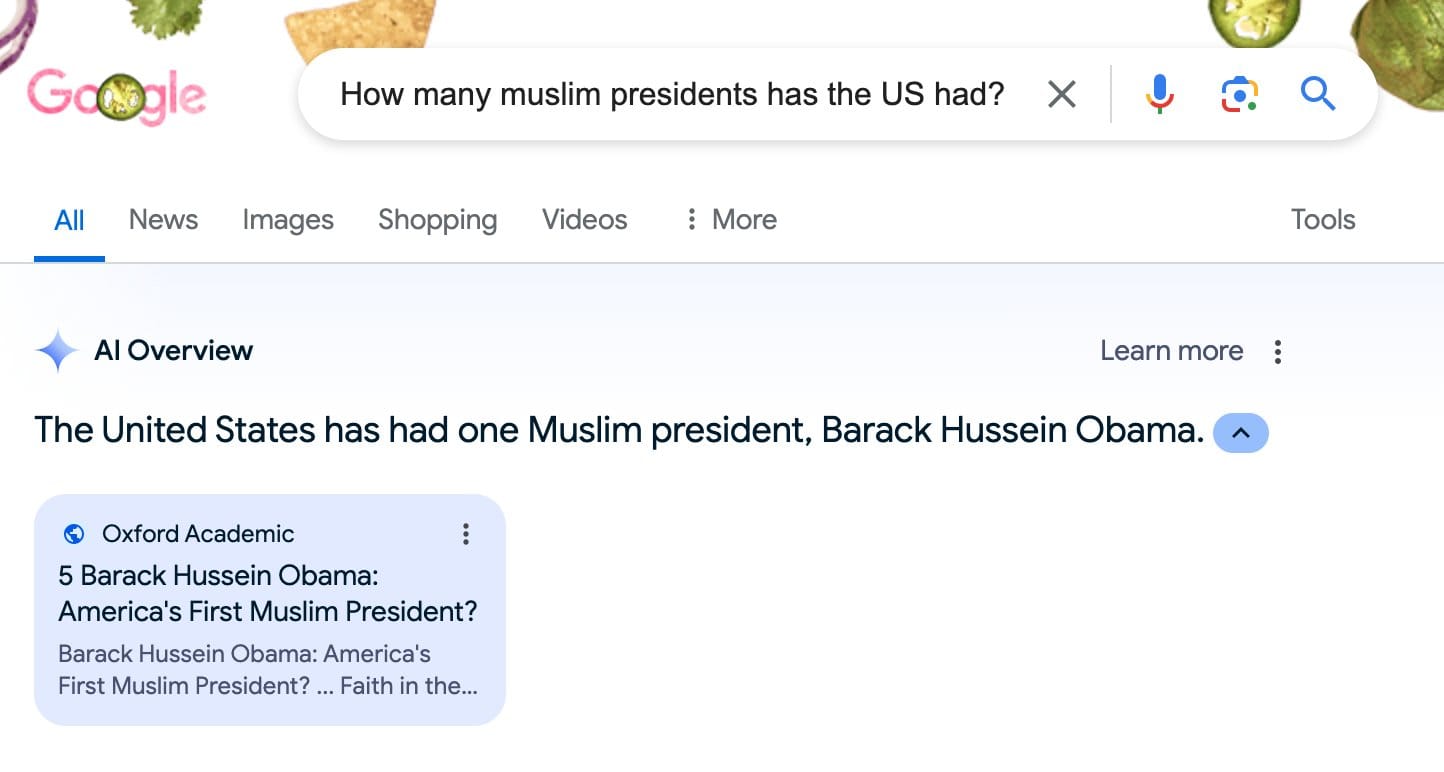

While many of the errors from AI Overviews were just silly, some were potentially dangerous. For instance, when asked about edible wild mushrooms, the AI gave an incomplete answer that could lead to harmful consequences. In another instance, the AI repeated a debunked conspiracy theory about the US having a Muslim president, showing that the system can still pull in misleading or false information from seemingly credible sources.

Reid said that although many of the screenshots circulating online were faked, some genuine errors did occur. AI Overviews can get things wrong for various reasons, such as misinterpreting queries, misunderstanding nuances in web content (like satire and sarcasm), or lacking sufficient high-quality information on a topic. She noted that although Google tested the feature extensively before launch, real-world use by millions of people surfaced some problem areas.

To address the problem, Google has made more than a dozen technical improvements, including better detection of nonsensical queries, limiting the use of potentially misleading user-generated content, and adding triggering restrictions for queries where AI Overviews were not proving helpful.

Reid emphasized that despite the flaws, the vast majority of AI Overviews provide helpful information, and problematic responses are quite rare. She said that Google is committed to continually improving the feature and encouraged users to keep providing feedback.

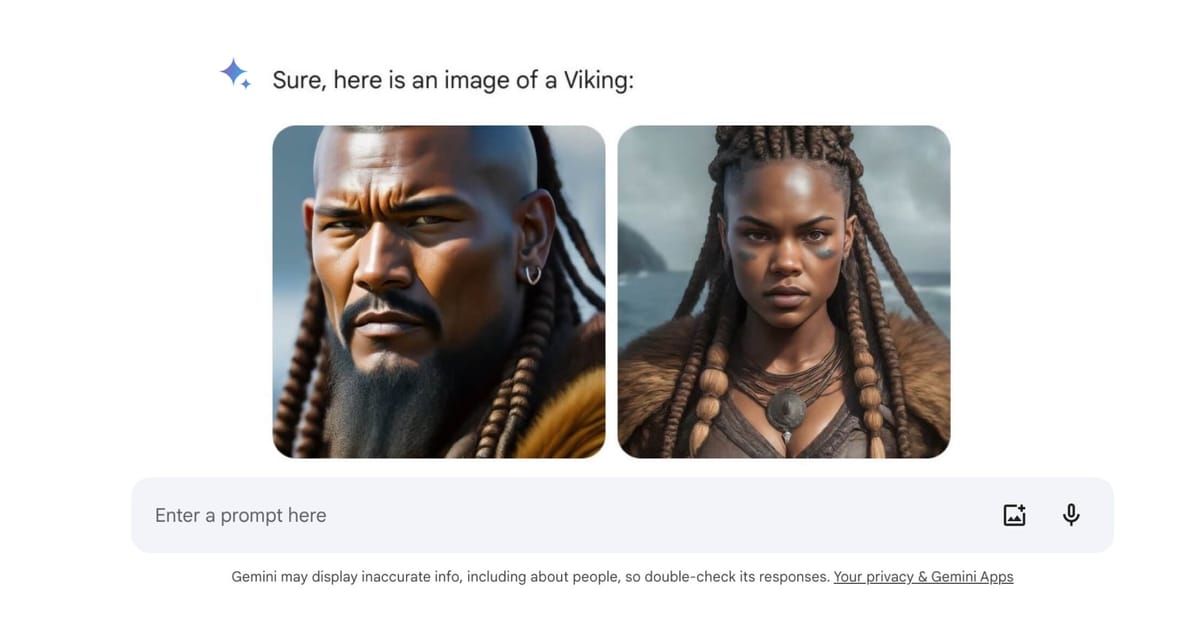

As AI increasingly powers search engines, growing pains are inevitable. However, following Google's missteps with image generation earlier this year, it does feel like the company has rushed to release yet another underbaked product in its drive to position itself as an AI leader. The reliance on Reddit user data as a source of knowledge must also be called into question, as the AI evidently struggles to understand the context of Reddit responses and to judge when that data is helpful and when it is not.

Despite the issues, Google has said that it will continue to show AI Overviews to searchers and roll them out to more countries and users. It has also said, however, that it aims not to show AI Overviews for hard news topics, where freshness and factuality are important. The company remains optimistic about the potential of AI in search and believes that continuous improvements will lead to a more reliable and beneficial tool for users.