Thinking Machines Claims 30x Cost Cut for Training AI Models

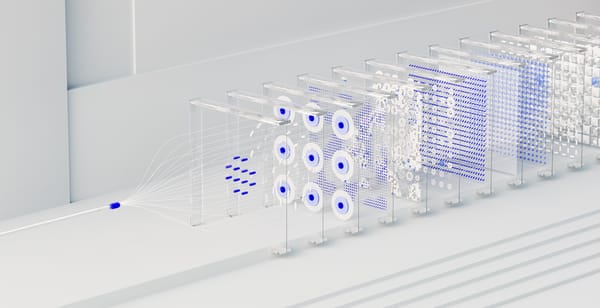

Thinking Machines has published a detailed recipe for “on-policy distillation,” showing how per-token grading from a teacher model can bring small student models to strong math and assistant performance at a fraction of the cost of reinforcement learning (RL). The company has made the code reproducible through its Tinker SDK.
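
To make “per-token grading” concrete, here is a minimal Python sketch. It assumes the grade for each token the student sampled is the teacher's log-probability of that token minus the student's own log-probability, a reverse-KL-style signal; the summary above does not spell out the formula. The function names `log_softmax` and `per_token_grades` are hypothetical, and the sketch does not use the Tinker SDK.

```python
import math


def log_softmax(logits):
    """Convert raw logits into log-probabilities (numerically stable)."""
    m = max(logits)
    log_z = m + math.log(sum(math.exp(x - m) for x in logits))
    return [x - log_z for x in logits]


def per_token_grades(student_logits, teacher_logits, sampled_tokens):
    """Grade each token the student sampled (assumed formula, see lead-in).

    student_logits / teacher_logits: one list of vocabulary logits per position.
    sampled_tokens: the token id the student actually produced at each position.
    Returns one grade per position: log p_teacher(token) - log p_student(token).
    """
    grades = []
    for s_row, t_row, tok in zip(student_logits, teacher_logits, sampled_tokens):
        student_lp = log_softmax(s_row)[tok]
        teacher_lp = log_softmax(t_row)[tok]
        grades.append(teacher_lp - student_lp)
    return grades


if __name__ == "__main__":
    # Toy 3-token vocabulary, two positions sampled by the student.
    student = [[0.0, 0.0, 0.0], [0.0, 0.0, 0.0]]
    teacher = [[2.0, 0.0, 0.0], [2.0, 0.0, 0.0]]
    print(per_token_grades(student, teacher, [0, 1]))
```

Under this assumption, a grade near zero means the teacher and student agree on that token, a negative grade means the teacher finds the student's choice less likely than the student did, and every position in the sampled sequence receives its own signal rather than one score for the whole answer.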