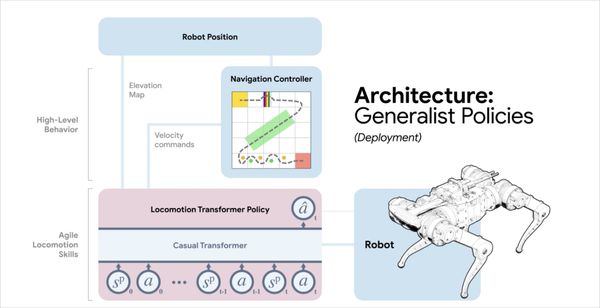

Project Rumi: Augmenting AI Understanding Through Multimodal Paralinguistic Prompting

New research from Microsoft aims to help AI understand non-verbal cues like facial expressions, tone of voice, gestures, and more
